I have been meaning to write a blog series on NSX-T installation for some time now, but work and related travel have taken precedence. However, this Christmas break has given me the opportunity to compose a “step-by-step” series to help the wider vCommunity.

As the title suggests, this post is aimed at SDN aspirants and helps prepare the networking of nested ESXi hosts for an NSX-T environment. I will briefly go through my home lab setup, which can either be replicated to run everything nested or tweaked according to the number of physical servers and switches you have.

I am using a single physical server with a “Xeon E5-2630L v4”, “192 GB” of RAM, a mixture of locally installed NVMe, SSD and spinning disks, and eight physical nics, which has met all requirements (thus far :-)) for running everything nested.

I also have a Cisco SG350 managed switch, which I have decided not to utilize, as it is not feasible for many individuals to invest in additional networking kit due to the increased cost. If you are following my “step-by-step” NSX-T installation series, Step 0 talks about the “High Level Design”, i.e. what we are going to achieve, including a quick tip on what I am using to simulate the physical router.

I like to follow the VMware VVD standards even in my home lab; therefore, I have a collapsed Management/Edge cluster and the relevant Compute Cluster(s).

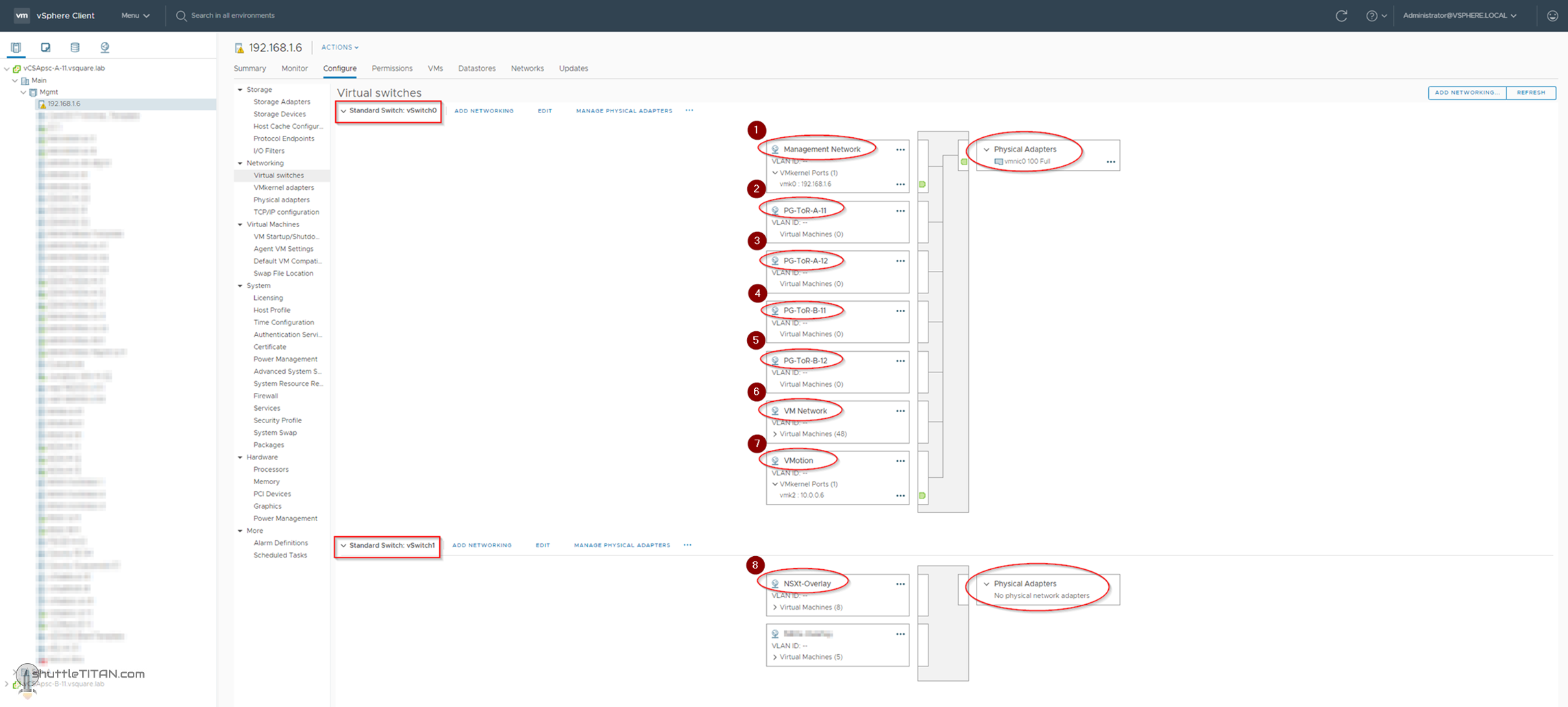

Management Cluster – The single physical server described above has two standard switches, configured with the respective port groups as follows:

| vSwitch | PortGroup | Details/Relevance |
|---|---|---|
| vSwitch0 (1 physical nic) | Management Network | Physical ESXi Host |
| | VMotion | VMotion Network |
| | VM Network | Utilized by all VMs i.e. vCenter Servers, NSX-T Management Cluster, ESXi VMs (nested), and others |
| | PG-ToR-A-11 | Site A Top of the rack switch network |
| | PG-ToR-A-12 | Site A Top of the rack switch network |
| | PG-ToR-B-11 | Site B Top of the rack switch network |
| | PG-ToR-B-12 | Site B Top of the rack switch network |
| vSwitch1 (no physical nics) | NSX-T Overlay | Geneve Encapsulated Traffic |

Note: “vSwitch1” does not have any physical nics assigned, whereas “vSwitch0” has only 1 (it could have more, but as everything is running nested, it should suffice). The image below will help visualize the setup above:

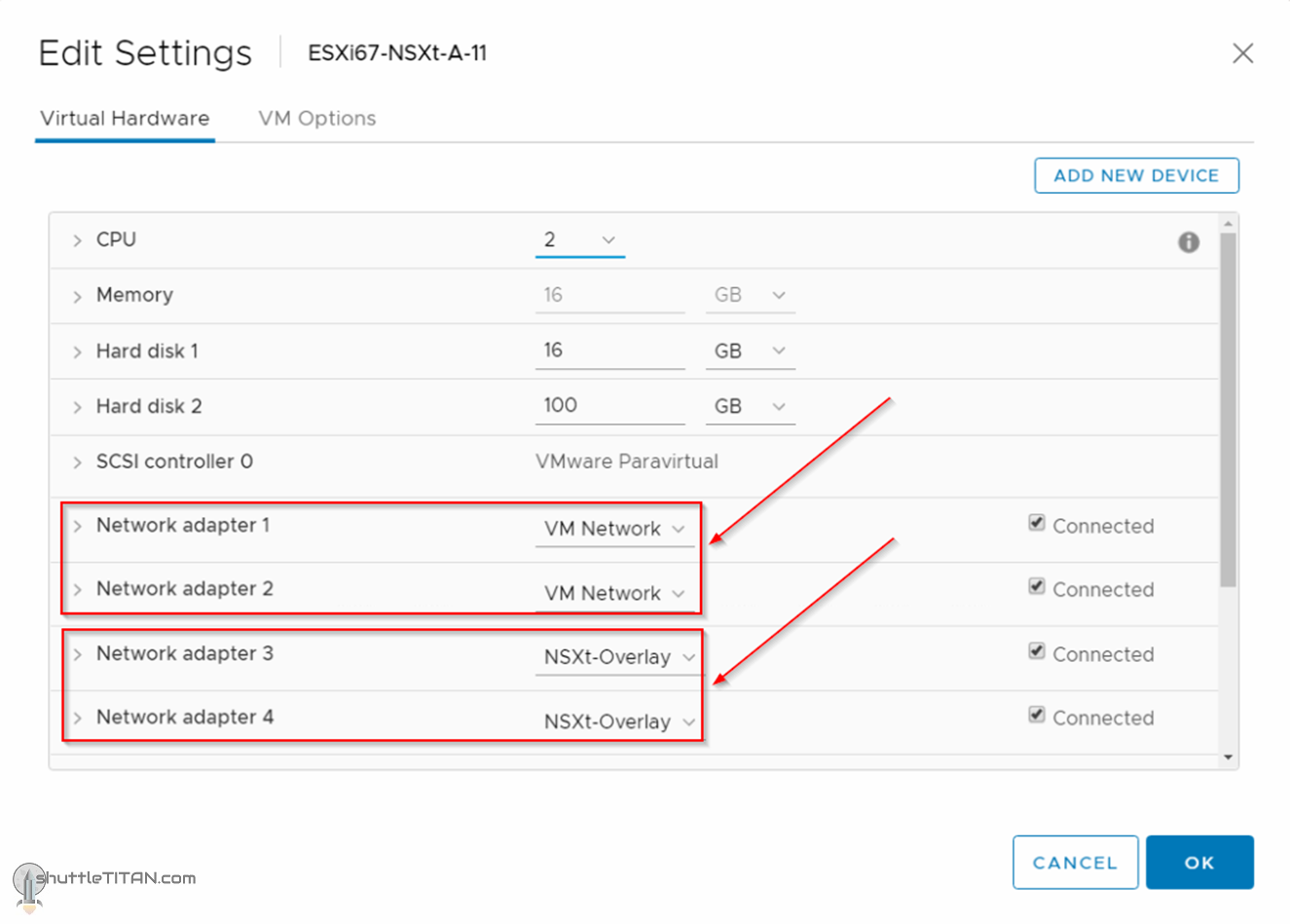

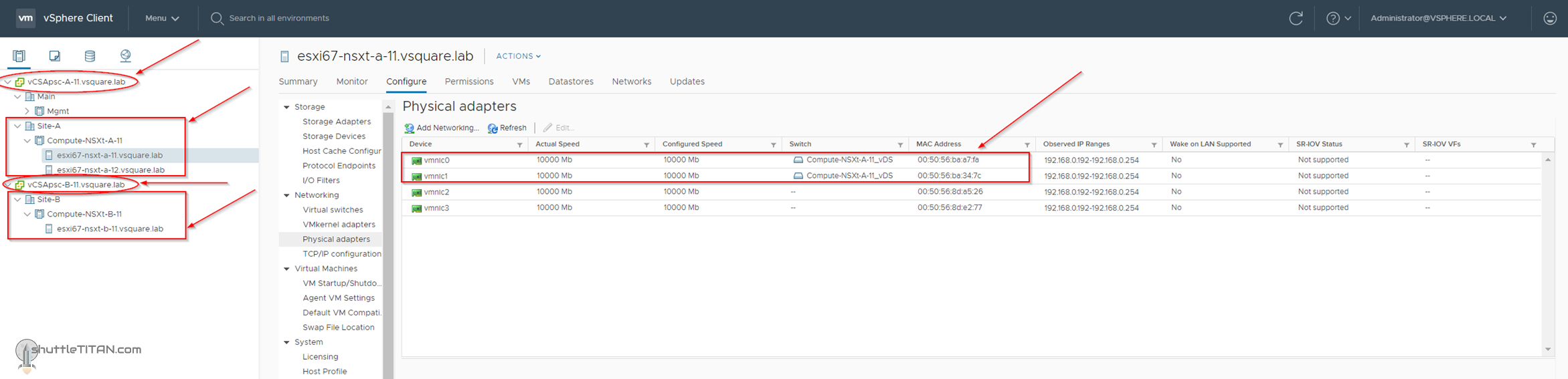
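For readers who prefer to script the standard-switch layout rather than click through the UI, here is a minimal sketch in Python that models the table above as data and prints the matching `esxcli` commands. The uplink name `vmnic0` is an assumption (the post does not say which physical nic backs “vSwitch0”), and on a fresh ESXi install “vSwitch0”, its uplink, “Management Network” and “VM Network” typically exist already, so those lines may be redundant on your host. Treat it as a starting point, not as the exact procedure used in this lab.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class StandardSwitch:
    name: str
    uplinks: List[str] = field(default_factory=list)      # physical nics; may be empty
    port_groups: List[str] = field(default_factory=list)


# Layout copied from the table above. "vmnic0" is an assumed uplink name.
LAB_SWITCHES = [
    StandardSwitch(
        name="vSwitch0",
        uplinks=["vmnic0"],
        port_groups=[
            "Management Network", "VMotion", "VM Network",
            "PG-ToR-A-11", "PG-ToR-A-12", "PG-ToR-B-11", "PG-ToR-B-12",
        ],
    ),
    # No uplinks: overlay traffic stays inside the physical host.
    StandardSwitch(name="vSwitch1", port_groups=["NSX-T Overlay"]),
]


def esxcli_commands(switches: List[StandardSwitch]) -> List[str]:
    """Build esxcli command strings that create each switch, uplink and port group."""
    cmds = []
    for sw in switches:
        cmds.append(f"esxcli network vswitch standard add --vswitch-name={sw.name}")
        for nic in sw.uplinks:
            cmds.append(
                "esxcli network vswitch standard uplink add "
                f"--uplink-name={nic} --vswitch-name={sw.name}"
            )
        for pg in sw.port_groups:
            cmds.append(
                "esxcli network vswitch standard portgroup add "
                f'--portgroup-name="{pg}" --vswitch-name={sw.name}'
            )
    return cmds


if __name__ == "__main__":
    # Review the output before running any of it on a host.
    print("\n".join(esxcli_commands(LAB_SWITCHES)))
```

The script only prints strings; nothing is executed against a host, so you can review and trim the list before pasting it into an ESXi shell.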

Compute Clusters – There are two compute clusters, one in each site. All ESXi Hosts (in the respective compute clusters in each site) are hosted as VMs in the “Mgmt” Cluster (one physical ESXi Host, as described before). Every compute ESXi VM has 4 nics: the first two are connected to the “VM Network” port group and the remaining two to the “NSXt-Overlay” port group, as illustrated in the image below:

The Compute ESXi Hosts are added to the respective Site A/B vCenter Server’s compute clusters.

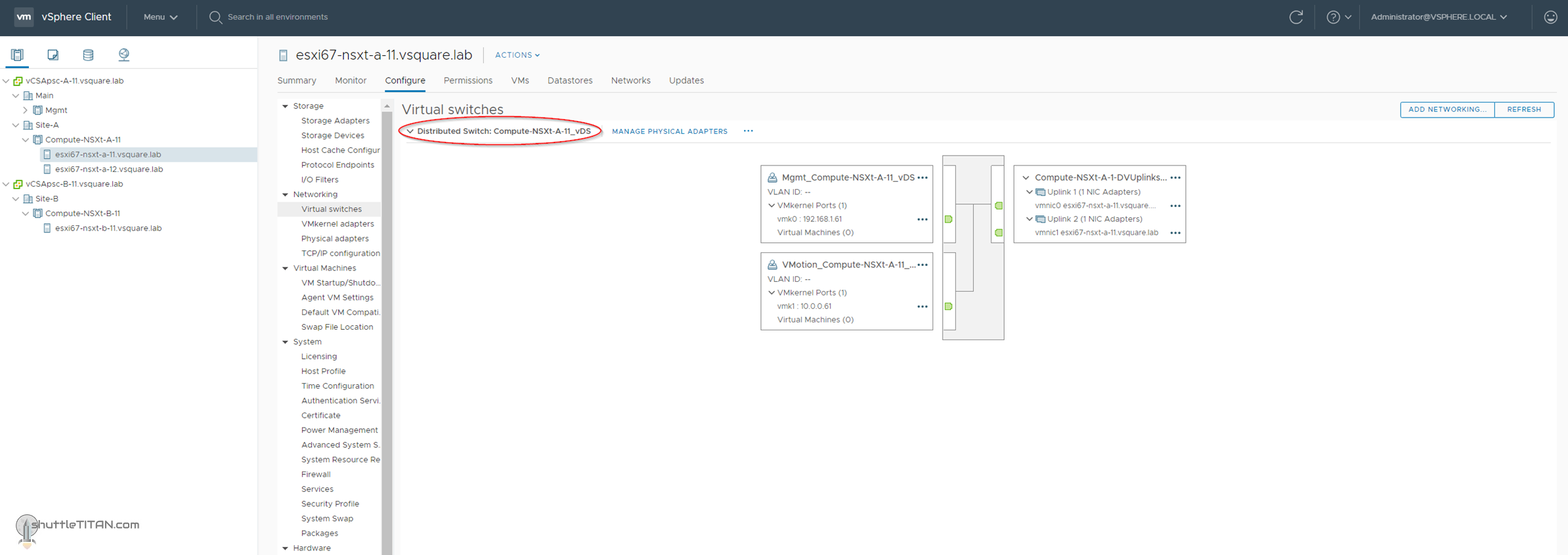
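The nic-to-port-group wiring of each nested ESXi VM can also be written down as a small lookup, which is handy when you build several nested hosts and want to double-check each one. This is only a sketch: the host names are hypothetical, and `vmnic0` to `vmnic3` are assumed names for how the four adapters show up inside the nested host.

```python
from typing import Dict, List

# Port group names as written in the post, in adapter order (nic 1 to nic 4).
NESTED_NIC_BACKING: List[str] = [
    "VM Network",
    "VM Network",
    "NSXt-Overlay",
    "NSXt-Overlay",
]


def nic_backing(hosts: List[str]) -> Dict[str, Dict[str, str]]:
    """Return {host: {vmnic: backing port group}} for every nested ESXi VM."""
    return {
        host: {f"vmnic{i}": pg for i, pg in enumerate(NESTED_NIC_BACKING)}
        for host in hosts
    }


# Hypothetical host names, one per site.
plan = nic_backing(["esxi-a-01", "esxi-b-01"])
for host, nics in plan.items():
    for nic, port_group in nics.items():
        print(f"{host}: {nic} -> {port_group}")
```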

Site A utilizes the “Compute-NSXt-A-11_vDS” for the first two nics; the remaining two are unused for now (they are utilized by the N-VDS later, in the “step-by-step” NSX-T Installation series):

The port groups configured under the “Compute-NSXt-A-11_vDS” are for Mgmt and VMotion network traffic, as illustrated in the image below:

The images depicted above are only from Site-A ESXi Hosts. Site-B ESXi Hosts’ networking is exactly the same but uses a different naming convention as an identifier, e.g. “Compute-NSXt-B-11_vDS”, etc.
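To make the per-site naming convention concrete, the following sketch derives the vDS name from the site letter and records which nics the vDS owns and which are left free for the N-VDS. The function name and the `vmnic0` to `vmnic3` adapter names are assumptions for illustration; only the two vDS names come from this post.

```python
from typing import Dict


def compute_uplink_plan(site: str) -> Dict[str, str]:
    """Describe who owns each nic inside a nested compute host of the given site."""
    if site not in ("A", "B"):
        raise ValueError(f"unknown site: {site!r}")
    vds = f"Compute-NSXt-{site}-11_vDS"  # e.g. Compute-NSXt-A-11_vDS
    return {
        "vmnic0": vds,  # Mgmt and VMotion port groups live on the vDS
        "vmnic1": vds,
        "vmnic2": "unused (reserved for N-VDS)",
        "vmnic3": "unused (reserved for N-VDS)",
    }


for site in ("A", "B"):
    print(site, compute_uplink_plan(site))
```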

This completes the lab setup for vSphere and the nested ESXi Host networking. I hope this was informative. Next, let’s take a look at the NSX-T Installation Series: Step 0 – High Level Design

Hi Varun,

First, excellent blog series. I have yet to go through all the posts, but I’m highly impressed with your idea of sharing the lab layout. Very few people do it.

After reading this blog post and the second one (following this), I could not work out the IP address scheme you have allocated to the ESXi hosts. Is it 192.168.1.x?

And if I have understood correctly, vCenter and all VMs are deployed on the VM Network port group. But in the next blog post I see you have attached them to PG-ToR-A-11. Or am I missing something? I’m really bad at reading logical diagrams, I must admit.

It would be really nice if you could assist me with the query above. I have also created a schematic (the way I understood it) but could not find a better way to share it with you.

Hi Preetam,

Thanks for reading, I am glad to hear that this blog series is helping aspirants like you to get started with NSX-T.

Correct, all ESXi Hosts, both Physical and Nested, are on 192.168.1.x, along with the management VMs, i.e. vCenter, NSX-T Managers and the Client.

The “PG-ToR-A-11” portgroup is only relevant and used by the EDGE VM, mentioned in Step 10 (Option2) / Step 11.

Hello Varun,

This is an excellent blog series. One quick question: I am deploying a fully collapsed cluster. Can we use one physical nic to install and configure the NSX-T environment and attach the other physical nic later? I have production workloads already running on the cluster, which has only 4 physical nics: 2 for Production and 2 for Mgmt, vMotion and vSAN. Our plan is to not move mgmt traffic from the vDS.

I would really appreciate your response.

Thank you.

Just a quick but big Thank You for the detailed explanations

I was starting with NSX and had issues with my nested lab, followed your instructions and everything is working

Keep up the good work and thanks again