One of my colleagues asked me about “Bifurcation” when it came up in a discussion about running multiple NVMe drives from a single PCIe slot. As I explained what it is and why one should consider it before buying a motherboard, I shared my own home lab experience, which prompted me to write this blog post for the wider community.

Background: I had multiple disks in my Supermicro server: three(3) NVMe SSDs, two(2) SSDs and two(2) HDDs. One of my SATA HDDs (2TB in size) decided to go kaput recently. I thought of replacing it with an internal SATA SSD of equivalent size to keep the expense low. Checking prices on Amazon, 2TB SSDs were somewhere in the range of £168 to £200, and somehow I stumbled upon the WD Blue SN550 NVMe SSD 1TB for just £95. I remember buying my first Samsung EVO 960 1TB NVMe for about £435 in September 2017, when the 2TB version of the same model cost around a grand!

The read and write speeds of the fastest 2TB SATA SSD in that price bracket, the SanDisk SSD Plus (545 MB/s and 540 MB/s respectively), were no match for the WD Blue SN550 NVMe SSD’s 2400 MB/s and 1950 MB/s. I was already using three(3) NVMe SSDs and loved how my nested VMware vSphere (ESXi) home lab ran “smooth as butter”, so buying two(2) x 1TB WD NVMe SSDs instead was a no-brainer. Of course, I would have to spend extra on more NVMe PCIe adapters, but I would rather spend a little more now to future-proof my home lab/server and get the extra speed boost ;).

The PCIe-based peripherals in my home lab are a Supermicro Quad Port NIC card, an Nvidia GPU and Samsung NVMe SSDs. This post uses the NVMe SSDs and the GPU as examples and covers the following:

- What is PCIe Bifurcation?

- Interpretation of an example motherboard layout and its architecture

- Limitations of the example motherboard

- Understand default PCIe slot behaviour with Dual NVMe PCIe adapter

- How to enable PCIe Bifurcation?

- Optimal PCIe Bifurcation configurations – three(3) use cases

Before we begin, let’s get the dictionary definition out of the way:

What is PCIe Bifurcation?

PCIe bifurcation is exactly what the definition suggests: dividing a PCIe slot into smaller chunks/branches. For example, a PCIe x8 slot could be bifurcated into two(2) x4 chunks, and a PCIe x16 slot into four(4) x4 chunks (x4x4x4x4), two(2) x8 chunks (x8x8), or one(1) x8 and two(2) x4 chunks (x8x4x4 / x4x4x8). If this does not make sense now, it will later, so keep reading.
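The split notation is easier to see enumerated. The Python sketch below lists every way an x16 or x8 link can be carved into x16/x8/x4 chunks, under the assumption (typical of Intel Xeon root ports, but not something every platform guarantees) that a chunk must start at a lane offset that is a multiple of its own width. The function names are illustrative, and which of these modes a particular BIOS actually offers is up to the vendor.

```python
def layouts(width, offset=0, sizes=(16, 8, 4)):
    """Yield every split of `width` lanes into chunks taken from `sizes`.

    Assumption: a chunk of N lanes must start at a lane offset divisible
    by N (an x8 chunk may use lanes 0-7 or 8-15, but not lanes 4-11).
    """
    if width == 0:
        yield []
        return
    for size in sizes:
        if size <= width and offset % size == 0:
            for rest in layouts(width - size, offset + size, sizes):
                yield [size] + rest


def label(chunks):
    """Render [8, 4, 4] as the BIOS-style string 'x8x4x4'."""
    return "".join(f"x{n}" for n in chunks)


if __name__ == "__main__":
    for lanes in (16, 8):
        print(f"x{lanes}:", [label(c) for c in layouts(lanes)])
```

Under that assumption the x16 result is exactly the set listed above (x16 itself plus x8x8, x8x4x4, x4x4x8 and x4x4x4x4), and an x8 slot can only become x4x4.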

Note: PCIe bifurcation does not lower the speed of each lane (PCIe 3.0 lanes stay PCIe 3.0); it only splits the lanes between devices. To use bifurcation, the motherboard must support it and, if it does, the BIOS must expose it as well.
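Since bifurcation leaves each lane’s speed untouched, a device’s ceiling is simply the per-lane rate multiplied by the lanes it ends up with. This rough Python calculation uses the standard signalling rates (PCIe 2.0: 5 GT/s with 8b/10b encoding; PCIe 3.0: 8 GT/s with 128b/130b encoding) and ignores packet and protocol overhead, so real throughput will be somewhat lower; `link_bandwidth_mb_s` is just a name chosen for this example.

```python
# Signalling rate (GT/s) and line-code efficiency per PCIe generation.
PCIE_GENERATIONS = {
    2: (5.0, 8 / 10),     # PCIe 2.0: 8b/10b encoding
    3: (8.0, 128 / 130),  # PCIe 3.0: 128b/130b encoding
}


def link_bandwidth_mb_s(gen, lanes):
    """Theoretical one-direction bandwidth in MB/s, ignoring protocol overhead."""
    gt_per_s, efficiency = PCIE_GENERATIONS[gen]
    per_lane = gt_per_s * 1e9 * efficiency / 8 / 1e6  # bits/s -> bytes/s -> MB/s
    return per_lane * lanes


if __name__ == "__main__":
    for gen, lanes in [(3, 16), (3, 8), (3, 4), (2, 4), (2, 2)]:
        print(f"PCIe {gen}.0 x{lanes}: ~{link_bandwidth_mb_s(gen, lanes):.0f} MB/s")
```

By this estimate an x4 chunk of a PCIe 3.0 link gives each NVMe SSD roughly 3.9 GB/s, comfortably above the WD Blue SN550’s rated 2400 MB/s read speed, while a PCIe 2.0 x4 slot tops out at about 2 GB/s before overhead.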

When I bought the Supermicro X10SRH-CLN4F motherboard in September 2017, it came with BIOS 2.0b, which did not have any “Bifurcation” options. As a result, when I plugged my Supermicro AOC-SLG3-2M2 (Dual NVMe PCIe Adapter) into any slot, it would only detect one(1) of the two(2) NVMe SSDs installed. Getting the card to detect both NVMe SSDs required “PCIe Bifurcation”, which was only available in a later BIOS version that was not yet public at the time, but Supermicro support was great and the engineer shared it with me before it went GA.

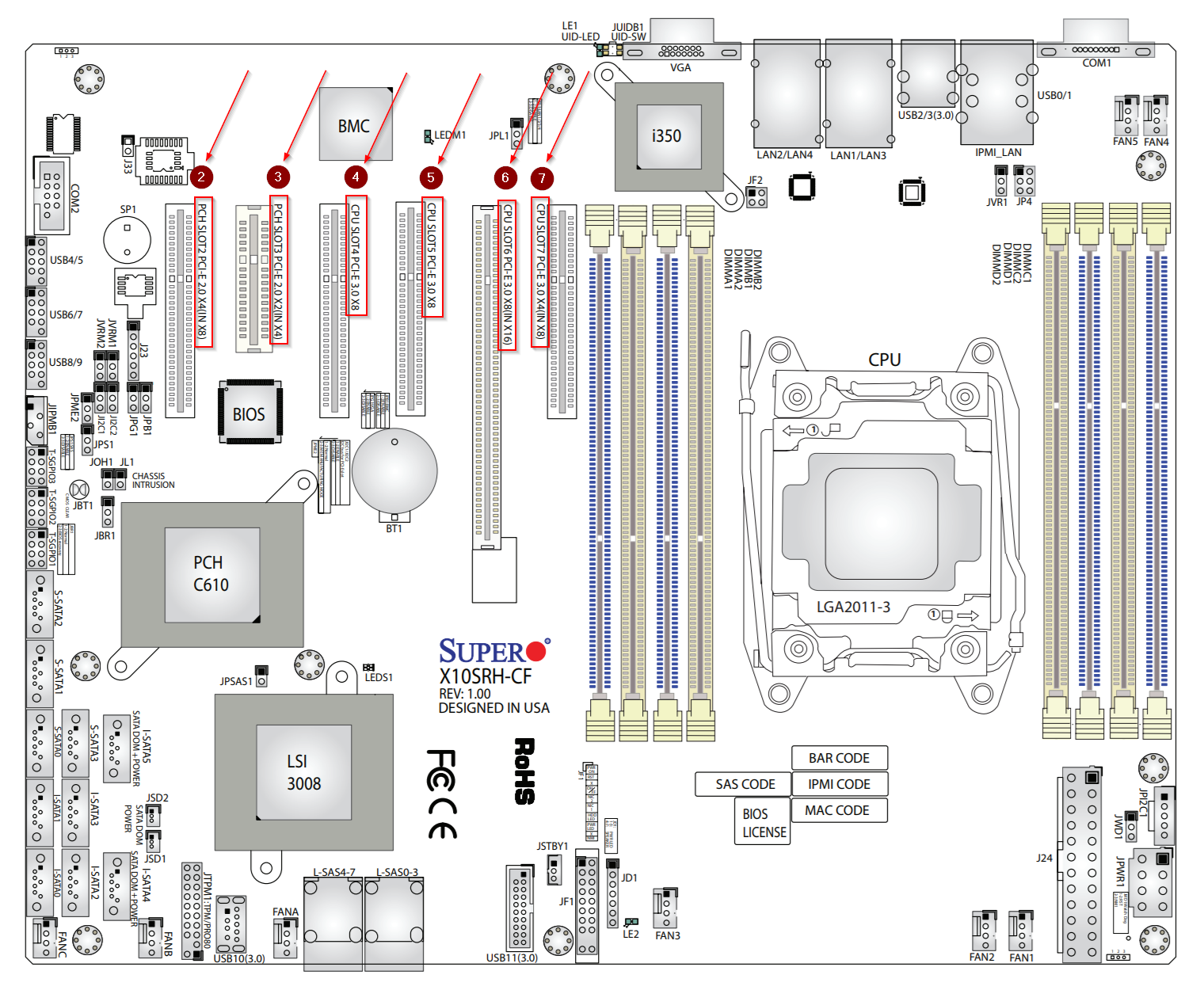

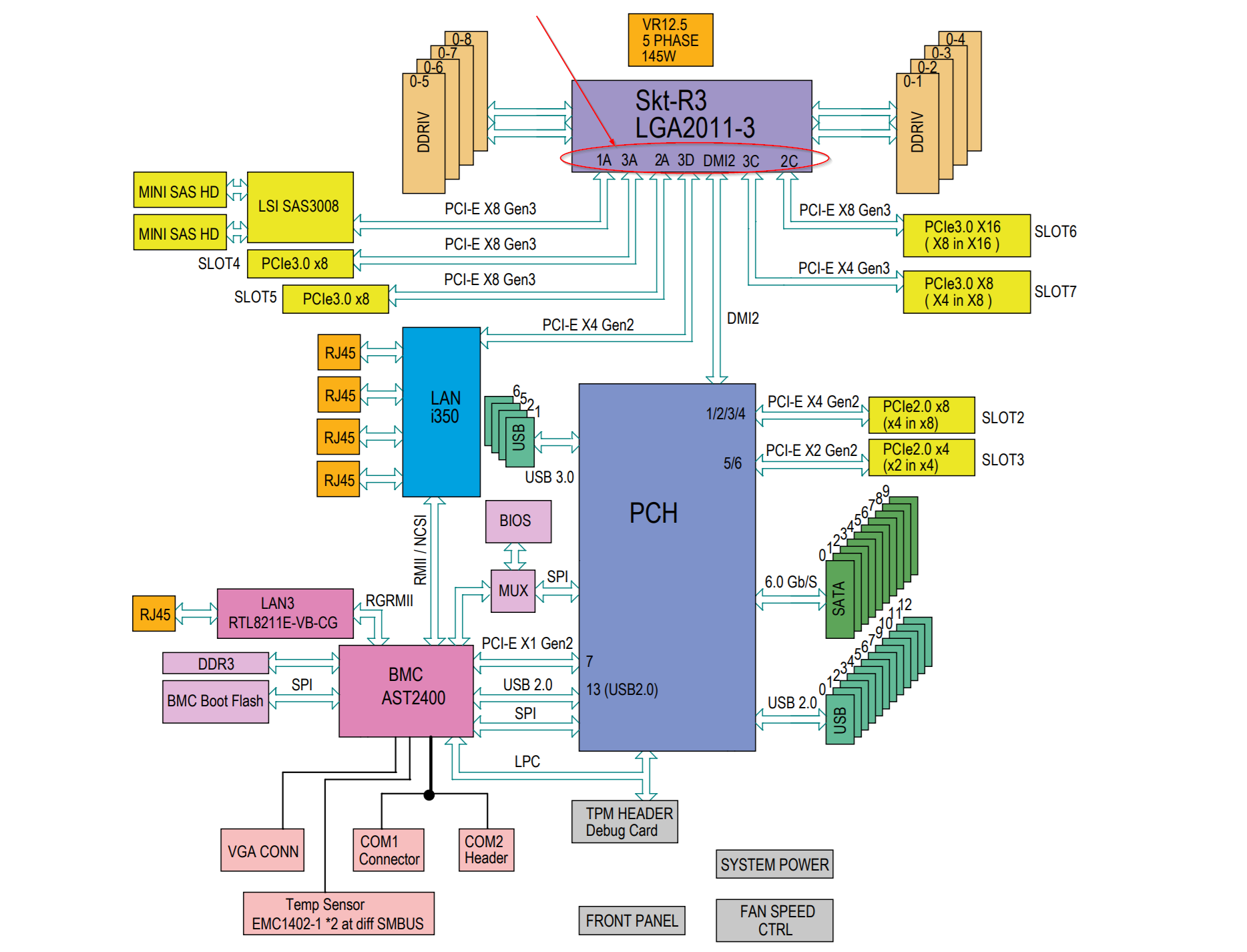

Ok, let’s take my motherboard (Supermicro X10SRH-CLN4F) layout as an example:

It has six(6) physical PCIe slots, labelled slot2, slot3, slot4, slot5, slot6 and slot7. However, the CPU socket provides only three(3) PCIe 3.0 links, plus PCIe 2.0 lanes from the PCH (Platform Controller Hub), which connects to the CPU via DMI2. The CPU links are numbered 1, 2 or 3, followed by a letter for the port (see the block diagram below):

Interpretation of motherboard layout and its architecture:

- CPU/PCIe Link 1: Port 1A – used for the LSI SAS3008 I/O controller.

- CPU/PCIe Link 2: Ports 2A and 2C – Link 2 is PCIe 3.0 x16, split between slot5 and slot6 as x8 lanes each (even though slot6 is physically x16).

- CPU/PCIe Link 3: Ports 3A, 3C and 3D – Link 3 is also PCIe 3.0 x16, split between slot4, slot7 and the i350 LAN controller as x8, x4 and x4 lanes respectively (even though slot7 is physically x8).

- DMI2 – used for slot2 and slot3 via the PCH (Platform Controller Hub):

  - PCIe 2.0 x4 for slot2 (even though it is physically x8)

  - PCIe 2.0 x2 for slot3 (even though it is physically x4)
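One way to read the port letters is to assume each x16 CPU link is built from four x4 lane groups, A to D, with a port named after the first group it occupies; an x8 port such as 2A would then span groups A and B, which would explain why no 2B or 3B appears above. The Python sketch below checks the list above against that assumption by computing each port’s lane range and confirming that Links 2 and 3 each add up to x16 without overlap. Link 1 is left out because its width is not given here.

```python
# Assumption: each CPU link is x16, made of four x4 lane groups lettered A-D,
# and a port is named after the first group it occupies.
GROUP_WIDTH = 4
GROUP_LETTERS = "ABCD"

# Port -> (electrical lanes, what it feeds), taken from the list above.
LINKS = {
    2: {"2A": (8, "slot5"), "2C": (8, "slot6")},
    3: {"3A": (8, "slot4"), "3C": (4, "slot7"), "3D": (4, "LAN i350")},
}


def lane_ranges(ports):
    """Return {port: (first_lane, last_lane)}; raise if two ports overlap."""
    used = set()
    ranges = {}
    for port, (width, _feeds) in ports.items():
        start = GROUP_LETTERS.index(port[-1]) * GROUP_WIDTH
        lanes = set(range(start, start + width))
        if lanes & used:
            raise ValueError(f"port {port} overlaps another port")
        used |= lanes
        ranges[port] = (start, start + width - 1)
    return ranges


if __name__ == "__main__":
    for link, ports in LINKS.items():
        total = sum(width for width, _feeds in ports.values())
        print(f"Link {link} (x{total}):", lane_ranges(ports))
```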

I created the following table for easier understanding (you could do the same for your motherboard):

| PCIe Slot Number | CPU/PCIe Port | PCIe version | PCIe Slot Size | PCIe Lanes |
|---|---|---|---|---|
| 2 | DMI2 | 2.0 | x8 | x4 |
| 3 | DMI2 | 2.0 | x4 | x2 |
| 4 | 3A | 3.0 | x8 | x8 |
| 5 | 2A | 3.0 | x8 | x8 |
| 6 | 2C | 3.0 | x16 | x8 |
| 7 | 3C | 3.0 | x8 | x4 |

Limitations of the motherboard:

This motherboard rules out a “Quad NVMe PCIe Adapter” (not that I need one…yet), because no slot has a full x16 lanes. However, I can use a maximum of three(3) “Dual NVMe PCIe Adapters” in the three(3) slots with x8 PCIe lanes, plus two(2) more “Single NVMe PCIe Adapters” in the remaining two(2) x4 slots, if needed.
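The arithmetic behind these limits can be sketched in a few lines of Python. It assumes a Quad adapter needs a full x16 slot, a Dual adapter an x8 slot and a Single adapter an x4 slot (one x4 per SSD), with electrical lane counts taken from the table above; `plan` simply picks the widest adapter each slot can host and is an illustration, not a vendor sizing tool.

```python
# Electrical lanes per slot, from the table above.
SLOT_LANES = {2: 4, 3: 2, 4: 8, 5: 8, 6: 8, 7: 4}

# Adapter -> (lanes it needs, NVMe SSDs it carries); listed widest first.
ADAPTERS = {"Quad": (16, 4), "Dual": (8, 2), "Single": (4, 1)}


def plan(slot_lanes):
    """Give each slot the widest adapter it can host; return {slot: adapter}."""
    result = {}
    for slot, lanes in sorted(slot_lanes.items()):
        for name, (needed, _drives) in ADAPTERS.items():  # widest first
            if lanes >= needed:
                result[slot] = name
                break
    return result


if __name__ == "__main__":
    layout = plan(SLOT_LANES)
    drives = sum(ADAPTERS[name][1] for name in layout.values())
    print(layout, f"-> up to {drives} NVMe SSDs")
```

Slot3 (x2) is left empty and no slot qualifies for a Quad adapter, which matches the limits described above: three Dual and two Single adapters, or up to eight NVMe SSDs.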

The Supermicro X10SRH-CLN4F has been running pretty sweet for me so far, and it will meet my current estimated requirements for future PCIe storage expansion. However, if you are in the market for a new motherboard and intend to run several PCIe-based peripherals (including GPUs), consider these limitations before you make the purchase.

Understand default PCIe slot behaviour with Dual NVMe PCIe adapter:

Ok, let’s now talk about the “Dual NVMe PCIe adapter”, e.g. the Supermicro AOC-SLG3-2M2 (or any other), which requires x8 PCIe lanes:

- If one(1) SSD is installed in the “Dual NVMe PCIe adapter” and the adapter is plugged into any PCIe slot (except slot3, which has only x2 PCIe lanes), the NVMe SSD will be detected.

- If two(2) SSDs are installed in a “Dual NVMe PCIe adapter” and the adapter is plugged into PCIe slot2 or slot7, only one(1) NVMe SSD will be detected, because these slots have only x4 lanes.

- If two(2) SSDs are installed in a “Dual NVMe PCIe adapter” and the adapter is plugged into PCIe slot4, slot5 or slot6, again only one(1) NVMe SSD will be detected by default (with the BIOS setting left on “Auto”).

The last option above is the only option capable of detecting both NVMe SSDs installed in a “Dual NVMe PCIe adapter”, because those slots have x8 PCIe lanes available, and this is where bifurcation comes into the picture.
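The three scenarios can be condensed into a toy Python model: with the slot left on “Auto” it trains as one link and only the first SSD behind the adapter appears, while each x4 chunk created by bifurcation can host its own SSD. This is a simplified restatement of the behaviour described above for this board (including slot3 detecting nothing at x2), not a general account of PCIe enumeration; `detected_ssds` is a hypothetical helper.

```python
def detected_ssds(slot_lanes, x4_chunks, installed):
    """Toy model: how many SSDs on a multi-M.2 adapter get detected.

    slot_lanes: electrical lanes wired to the slot (e.g. 8 for slot4-6)
    x4_chunks:  x4 chunks the BIOS splits the slot into (1 = left on "Auto")
    installed:  NVMe SSDs fitted to the adapter
    """
    if slot_lanes < 4:
        return 0  # slot3 (x2): no SSD detected on this board
    # A slot cannot be split into more x4 chunks than it has lanes for.
    usable_chunks = min(x4_chunks, slot_lanes // 4)
    return min(installed, usable_chunks)


if __name__ == "__main__":
    print("slot7 (x4), Auto:", detected_ssds(4, 1, 2))  # 1
    print("slot5 (x8), Auto:", detected_ssds(8, 1, 2))  # 1
    print("slot5 (x8), x4x4:", detected_ssds(8, 2, 2))  # 2
    print("slot3 (x2), Auto:", detected_ssds(2, 1, 1))  # 0
```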

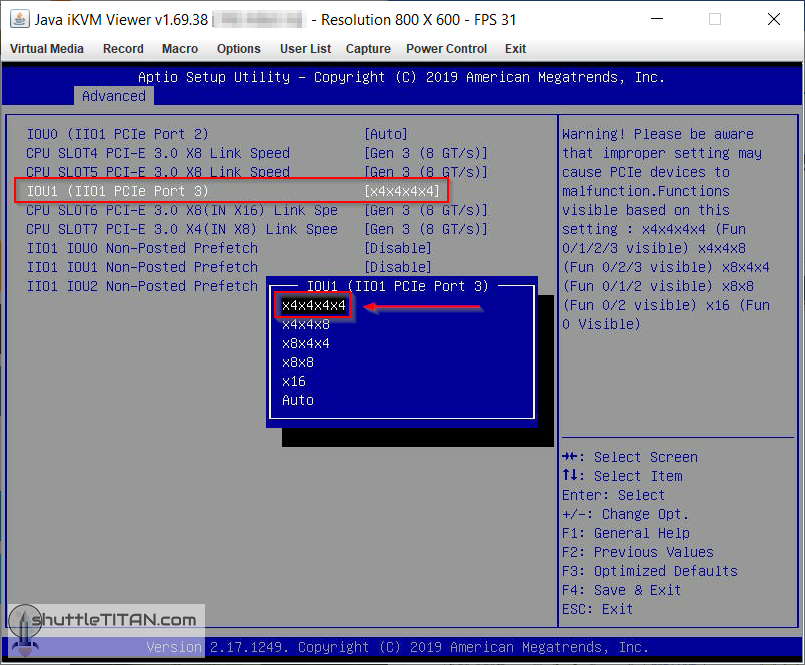

How to enable PCIe Bifurcation?

As mentioned before, the motherboard must be compatible and the BIOS must offer an option to enable bifurcation. You will need to dig out the bifurcation options in your motherboard’s BIOS settings; for the Supermicro X10SRH-CLN4F (BIOS v3.2, build 11/22/2019) they are located under:

BIOS -> Advanced -> Chipset Configuration -> North Bridge -> IIO Configuration -> IIO1 Configuration

Optimal PCIe Bifurcation Configuration – Use case 1:

If the “Dual NVMe PCIe Adapter” is plugged into PCIe slot4, the “IOU1 (IIO1 PCIe Port 3)” setting needs to be changed from “Auto” to “x4x4x4x4”. This splits (bifurcates) PCIe 3.0 Link 3 into four(4) x4 chunks, and the table will now look like this:

| PCIe Slot Number | CPU/PCIe Port | PCIe version | PCIe Slot Size | PCIe Lanes |
|---|---|---|---|---|
| 2 | DMI2 | 2.0 | x8 | x4 |
| 3 | DMI2 | 2.0 | x4 | x2 |
| 4 | 3A | 3.0 | x8 | x4x4 |
| 5 | 2A | 3.0 | x8 | x4 |
| 6 | 2C | 3.0 | x16 | x8 |
| 7 | 3C | 3.0 | x8 | x4 |

Note: As I explained in the “Interpretation of motherboard layout and its architecture” section, CPU/PCIe Link 3 has three(3) ports: 3A, 3C and 3D. CPU/PCIe Port 3A is the only port affected by this change; it is now split from x8 to x4x4, so both NVMe SSDs are detected. Ports 3C and 3D remain unaffected, as they were already using x4 lanes each.
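After changing the setting, it is worth confirming that each SSD actually trained at x4. On a Linux host, PCIe devices expose `class`, `current_link_width` and `max_link_width` attributes under `/sys/bus/pci/devices/`, and `lspci -vv` reports the same information on its `LnkSta` lines. The Python sketch below lists NVMe controllers (PCI class `0x010802`) with their negotiated and maximum link widths; the function names and the `root` parameter (handy for testing against a fake directory) are my own choices.

```python
from pathlib import Path

NVME_CLASS = "0x010802"  # PCI class code for an NVM Express controller


def _read(path):
    """Read a sysfs attribute, returning None if it is missing or unreadable."""
    try:
        return path.read_text().strip()
    except OSError:
        return None


def nvme_link_widths(root="/sys/bus/pci/devices"):
    """Return {pci_address: (current_width, max_width)} for NVMe controllers."""
    root = Path(root)
    if not root.is_dir():
        return {}
    widths = {}
    for dev in sorted(root.iterdir()):
        if _read(dev / "class") != NVME_CLASS:
            continue  # not an NVMe controller
        widths[dev.name] = (_read(dev / "current_link_width"),
                            _read(dev / "max_link_width"))
    return widths


if __name__ == "__main__":
    for address, (current, maximum) in nvme_link_widths().items():
        print(f"{address}: running at x{current}, device supports x{maximum}")
```

With bifurcation working, each SSD on the adapter should appear as its own entry reporting x4.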

Optimal PCIe Bifurcation Configuration – Use case 2:

If the “Dual NVMe PCIe Adapter” is plugged into PCIe slot5 instead, the “IOU1 (IIO1 PCIe Port 2)” setting needs to be changed from “Auto” to “x4x4x4x4”. This splits PCIe 3.0 Link 2 into four(4) x4 chunks, and the table will then look like this:

| PCIe Slot Number | CPU/PCIe Port | PCIe version | PCIe Slot Size | PCIe Lanes |
|---|---|---|---|---|
| 2 | DMI2 | 2.0 | x8 | x4 |
| 3 | DMI2 | 2.0 | x4 | x2 |
| 4 | 3A | 3.0 | x8 | x8 |
| 5 | 2A | 3.0 | x8 | x4x4 |
| 6 | 2C | 3.0 | x16 | x4x4 |
| 7 | 3C | 3.0 | x8 | x4 |

Optimal PCIe Bifurcation Configuration – Use case 3 (my use case):

If you have a GPU (as I do: an Nvidia 1080Ti installed in PCIe slot6) alongside multiple PCIe-based NVMe SSDs, the optimal configuration is to change “IOU1 (IIO1 PCIe Port 2)” from “Auto” to “x4x4x8”. This keeps all x8 lanes of slot6 for the GPU, for peak performance, while detecting both NVMe SSDs in slot5. To detect another two(2) NVMe SSDs in slot4, “IOU1 (IIO1 PCIe Port 3)” must also be changed from “Auto” to “x4x4x4x4”. The table will now look like this:

| PCIe Slot Number | CPU/PCIe Port | PCIe version | PCIe Slot Size | PCIe Lanes | Peripherals attached |
|---|---|---|---|---|---|
| 2 | DMI2 | 2.0 | x8 | x4 | One(1) NVMe SSD |
| 3 | DMI2 | 2.0 | x4 | x2 | Quad Port NIC Card |
| 4 | 3A | 3.0 | x8 | x4x4 | Two(2) NVMe SSDs |
| 5 | 2A | 3.0 | x8 | x4x4 | Two(2) NVMe SSDs |
| 6 | 2C | 3.0 | x16 | x8 | Nvidia 1080Ti |
| 7 | 3C | 3.0 | x8 | x4 | One(1) NVMe SSD |
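All three use cases are consistent with one simple rule: the BIOS mode string is laid across the link’s 16 lanes starting from lane 0, and each port receives the chunks that fall within its own lanes. The Python sketch below encodes that rule. It is inferred from the tables above rather than taken from Supermicro documentation, the lane ranges assume four x4 lane groups (A to D) per link, and `apply_mode` is a hypothetical name.

```python
import re

# Assumed (first_lane, last_lane) of each port on the x16 links, in x4 groups A-D.
PORTS = {
    2: {"2A (slot5)": (0, 7), "2C (slot6)": (8, 15)},
    3: {"3A (slot4)": (0, 7), "3C (slot7)": (8, 11), "3D (LAN i350)": (12, 15)},
}


def apply_mode(ports, mode):
    """Lay a BIOS mode such as 'x4x4x8' across the link and group chunks by port."""
    widths = [int(w) for w in re.findall(r"x(\d+)", mode)]
    if sum(widths) != 16:
        raise ValueError(f"{mode} does not cover 16 lanes")
    result = {port: "" for port in ports}
    start = 0
    for width in widths:
        end = start + width - 1
        for port, (first, last) in ports.items():
            if first <= start and end <= last:
                result[port] += f"x{width}"
                break
        else:  # no single port contains this chunk
            raise ValueError(f"x{width} chunk at lane {start} straddles two ports")
        start = end + 1
    return result


if __name__ == "__main__":
    print("Use case 1, Port 3:", apply_mode(PORTS[3], "x4x4x4x4"))
    print("Use case 2, Port 2:", apply_mode(PORTS[2], "x4x4x4x4"))
    print("Use case 3, Port 2:", apply_mode(PORTS[2], "x4x4x8"))
    print("Use case 3, Port 3:", apply_mode(PORTS[3], "x4x4x4x4"))
```

Use case 2 also shows why it only suits an NVMe-only build: port 2C (slot6) comes back as x4x4, whereas x4x4x8 in use case 3 keeps slot6 at x8 for the GPU.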

I plan to install three(3) more NVMe SSDs in the next couple of weeks: two(2) x 1TB to replace my failed 2TB HDD and another 2TB for future expansion (possibly all three at 2TB if there are any deals on the upcoming Amazon Prime Day this month).

I hope this helps you make your purchase decision, or helps you understand your existing motherboard’s architecture and its PCIe bifurcation configurations.

Hello and thank you for this article.

As I was reading the section “Understand default PCIe slot behaviour with Dual NVMe PCIe adapter”, I arrived at the text which starts with: Point “C” is the only option capable of detecting two…

For clarity’s sake I would suggest you replace “Point “C”” by either “Situation 3” or “Scenario 3”.

Your hope of this article helping someone has come true with this reader here 😉

Thank you for sharing your experience and knowledge,

JB

Thanks for the feedback, James – suggestions are always welcome 🙂

I have amended the wording to begin with “The last option above is the only option capable of…”.

Thanks for reading.

Thanks for this! I only heard of bifurcation for PCIe today, and your article seems to cover everything there is to know to be able to handle a PCIe NVMe extension card, in a very precise and easy-to-understand way.

Nice work!

Very interesting, I hope it works with my next machine:

SuperMicro Cse X10SLH-N6 1U E3-1200 v3, 6x 10GbE

The idea is to make it a switch+NAS with 4 SATA 3.5" HDDs, 1 or 2 SATA SSDs and 2/4 NVMe M.2 drives, but I’ve left open the possibility of it serving as a switch/firewall/router without the NAS. We’ll see in the days following delivery… It’s coming.

I will share that

Knowledge increases by sharing it… the knowledge economy is one of the few that increases capital in a distributed way without damaging anyone!

It’s probably worth mentioning that PCIe ports using DMI are usually slower than PCIe ports using the CPU directly since they don’t have a direct connection to the CPU, rather they go through the chipset instead.

Interesting to know that it’s useful to change “Auto” to x4x4x4x4 in the bios.

Nice job.

I have an AMD Ryzen 7 3700X with an ASRock X570 Phantom Gaming 4 motherboard, which has only Hyper M.2 slots and one slot for Wi-Fi, but there are 8 SATA ports and 48 Mb RAM (+- 2100). I had an Asus HYPER M.2 X16 GEN 4 CARD for 4 M.2 NVMe drives, but only one of its slots works. I should change to x4x4x4x4 in my BIOS. Another idea was to use the M.2 Wi-Fi slot for something else with an adapter, but I think it’s only an M.2 E-key slot. What do you think?

Best,

PM