It’s been a while since I covered the NSX-T architecture in my previous blog, which unfortunately now applies only to NSX-T v2.3 and earlier. Fortunately, with NSX-T v2.4 and later, VMware introduced an architectural change that simplifies overall management, installation and upgrades, and brings high availability and scalability along with flexible deployment options.

I opted to split the new architecture into two parts, for easier understanding:

- NSX-T Architecture (Revamped): Part 1 (this blog) – talks about the revamped NSX-T architecture and its components.

- NSX-T Architecture (Revamped): Part 2 – talks about the NSX-T Agent/service communication between the NSX-T Manager Nodes and Transport Nodes.

NSX-T v2.4 was one of the major releases in NSX-T history, bringing a variety of new features, functionality and security for private, public and hybrid clouds; for more information, see the VMware release notes. NSX-T v2.5 was also a leap ahead, with many feature enhancements, notably the migration coordinator for migrating from NSX Data Center for vSphere to NSX-T Data Center; again, see the VMware release notes for details.

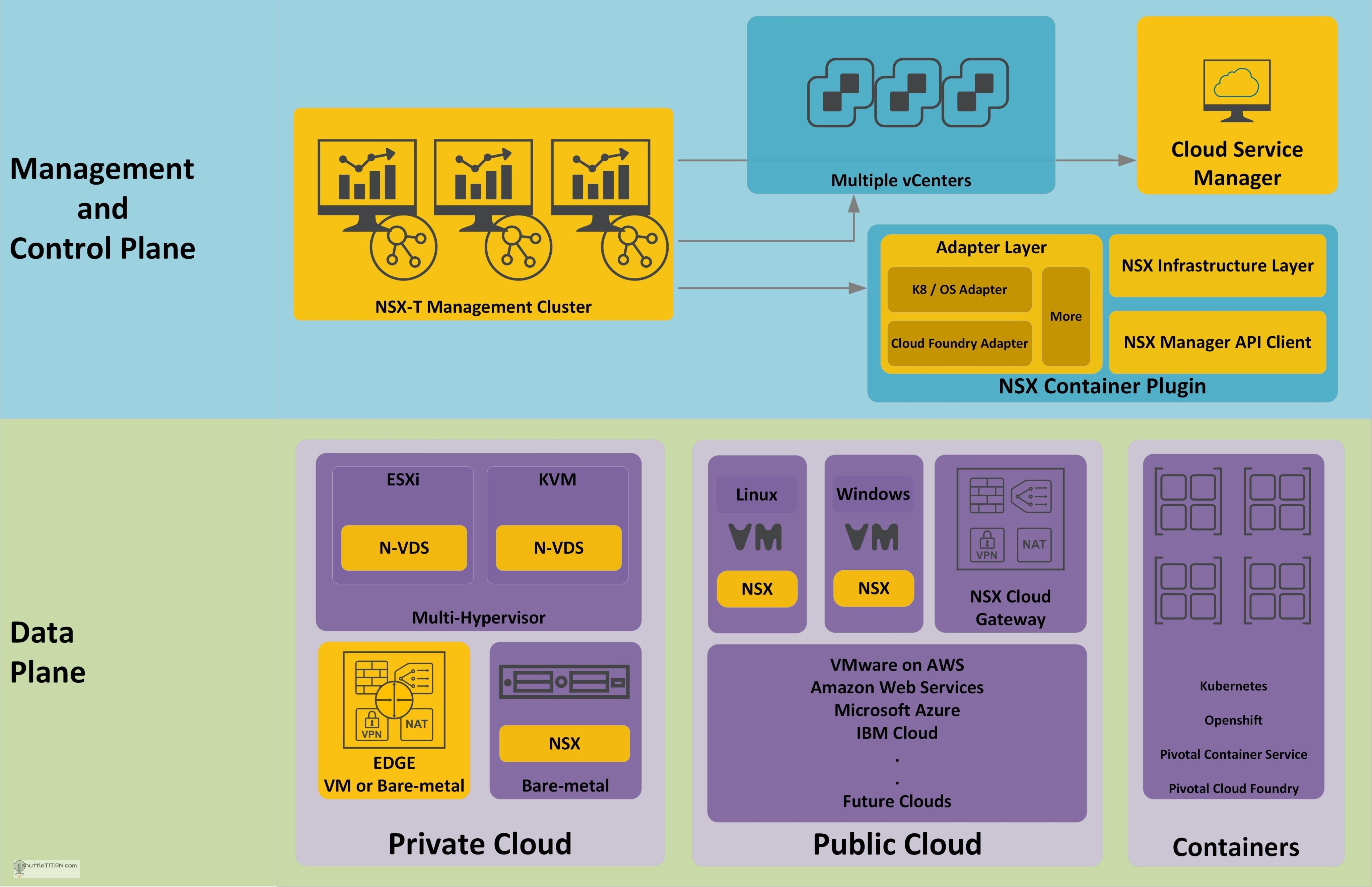

Along with many new features, NSX-T v2.4 (and later) brought a major architectural change: the Management and Control planes are consolidated into a single appliance that supports clustering and scales to three nodes. As with the earlier deployment of controller nodes, this now gives the Management plane, i.e. the user interface and API consumption, high availability.

We will not deep-dive into every individual component of NSX-T (perhaps in separate upcoming blogs 😊), but we will touch on each of them briefly to make sense of what they are and why they are used.

Management Plane:

The Management plane provides the entry point to NSX-T Data Center for operational tasks such as configuring and monitoring all management, control, and data plane components. Changes are made through either the NSX-T Data Center UI or the RESTful API. The Management plane is further split into two roles: the Policy role and the Management role.
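To make the "RESTful API" entry point a little more concrete, here is a minimal sketch that builds (but deliberately does not send) a basic-auth request for the cluster status. The host name and credentials are placeholders, and the `/api/v1/cluster/status` path is my assumption from the NSX-T API guide, so please verify it against the API reference for your version.

```python
import base64
import urllib.request


def build_cluster_status_request(manager_host, username, password):
    """Build a GET request for the NSX-T cluster status without sending it."""
    # Assumed endpoint path; confirm it in the API guide for your NSX-T release.
    url = f"https://{manager_host}/api/v1/cluster/status"
    # HTTP basic authentication: base64("user:password").
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
        method="GET",
    )


# Placeholder values for illustration only.
req = build_cluster_status_request("nsx-mgr.example.local", "admin", "changeme")
print(req.get_method(), req.full_url)
```

Actually sending it would mean passing `req` to `urllib.request.urlopen` and handling the manager's TLS certificate, which is left out here on purpose.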

Control Plane:

The Control plane is an advanced distributed state management system that provides control plane functions for logical switching and routing, and propagates the distributed firewall rules. It is further split into two parts: the Central Control Plane (CCP) and the Local Control Plane (LCP).

NSX-T Management Cluster – As mentioned earlier and illustrated in the image above, both the Management and Control planes are consolidated into a single appliance that scales to a three-node cluster, providing high availability for all services, including the GUI and API.

Cloud Service Manager (CSM) provides a single-pane-of-glass management endpoint with a complete view of all your public clouds. CSM is a virtual appliance that provides the UI and REST APIs for onboarding, configuring, and monitoring your public cloud inventory.

NSX Container Plug-in (NCP) runs as a container pod and is deployed when you use container-based applications. It provides integration between NSX-T and container orchestrators such as Kubernetes, as well as integration between NSX-T and container-based PaaS (platform as a service) products such as OpenShift and Pivotal Cloud Foundry.

NCP monitors changes to containers and other resources. It also manages networking resources such as logical ports, switches, routers, and security groups for the containers through the NSX API.

- Adapter layer: NCP is built in a modular manner so that individual adapters can be added for a variety of CaaS and PaaS platforms.

- NSX Infrastructure layer: Implements the logic that creates topologies, attaches logical ports, etc.

- NSX API Client: Implements a standardized interface to the NSX API.
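The three NCP layers above can be pictured with a small sketch. Every class and method name here is hypothetical and made up for illustration; this is not NCP's real code or API, only the layering idea: a platform adapter receives an event, the infrastructure layer decides what topology change is needed, and the API client is the only piece that talks to NSX.

```python
from abc import ABC, abstractmethod


class NsxApiClient:
    """Hypothetical stand-in for the NSX API client layer.

    It records calls instead of sending them to a real NSX Manager.
    """

    def __init__(self):
        self.calls = []

    def create_logical_port(self, switch, name):
        self.calls.append(("create_logical_port", switch, name))
        return f"{switch}/{name}"


class NsxInfrastructure:
    """Hypothetical infrastructure layer: turns 'attach this workload' into API calls."""

    def __init__(self, client):
        self.client = client

    def attach(self, namespace, workload):
        # Illustrative naming scheme: one logical switch per namespace.
        return self.client.create_logical_port(switch=f"ls-{namespace}", name=workload)


class PlatformAdapter(ABC):
    """Hypothetical adapter layer: one subclass per CaaS/PaaS platform."""

    def __init__(self, infra):
        self.infra = infra

    @abstractmethod
    def on_event(self, event):
        """Handle a platform event and return the created port, if any."""


class KubernetesAdapter(PlatformAdapter):
    def on_event(self, event):
        if event["type"] == "POD_ADDED":
            return self.infra.attach(event["namespace"], event["name"])
        return None  # other event types are ignored in this sketch


client = NsxApiClient()
adapter = KubernetesAdapter(NsxInfrastructure(client))
port = adapter.on_event({"type": "POD_ADDED", "namespace": "web", "name": "nginx-1"})
print(port)
```

Supporting another platform in this picture only means adding another `PlatformAdapter` subclass; the two lower layers stay untouched, which is the point of the modular design.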

Data Plane:

The Data plane performs stateless packet forwarding based on the tables populated by the control plane, and maintains statistics to report back to the NSX-T Manager. Many heterogeneous systems can now be part of the NSX-T Data plane; let’s discuss them briefly below:

Private Cloud:

Multi-Hypervisor: NSX-T is designed not just for ESXi but also for KVM-based hypervisors. The following hypervisors are supported by the NSX-T v2.5 and v2.4 releases:

| Hypervisor | Version (NSX-T v2.5) | Version (NSX-T v2.4) | CPU Cores | Memory |
|---|---|---|---|---|
| vSphere | v6.5 U2 minimum | v6.5 U2 (minimum ESXi 6.5 P03), v6.7 U1 (ESXi 6.7 EP06), v6.7 U2 | 4 | 16 GB |
| RHEL KVM | 7.6, 7.5 and 7.4 | 7.6, 7.5 and 7.4 | 4 | 16 GB |
| SUSE Linux Enterprise Server KVM | 12 SP3, SP4 | 12 SP3, SP4 | 4 | 16 GB |
| Ubuntu KVM | 18.04.2 LTS* | 18.04.2 LTS, 16.04.2 LTS | 4 | 16 GB |

\* In NSX-T Data Center v2.5, hosts running Ubuntu 18.04.2 LTS must be upgraded from 16.04. Fresh installs are not supported.

Note: Refer to the release notes of the NSX-T version you are going to deploy to confirm which hypervisor versions are supported.
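If you want to sanity-check hosts against the table above in a script, a minimal sketch could look like the following. The function and variable names are my own. Only the KVM rows are encoded, because the vSphere row is expressed as minimum versions rather than an exact list. Treating the CPU and memory figures as minimums is also an assumption on my part.

```python
# KVM rows transcribed from the table above, keyed by NSX-T version.
SUPPORTED_KVM = {
    "2.5": {
        "RHEL KVM": {"7.6", "7.5", "7.4"},
        "SUSE Linux Enterprise Server KVM": {"12 SP3", "12 SP4"},
        "Ubuntu KVM": {"18.04.2 LTS"},  # upgrade from 16.04 only, no fresh installs
    },
    "2.4": {
        "RHEL KVM": {"7.6", "7.5", "7.4"},
        "SUSE Linux Enterprise Server KVM": {"12 SP3", "12 SP4"},
        "Ubuntu KVM": {"18.04.2 LTS", "16.04.2 LTS"},
    },
}
MIN_CPU_CORES = 4   # assumption: the table value is a minimum
MIN_MEMORY_GB = 16  # assumption: the table value is a minimum


def check_host(nsx_version, hypervisor, os_version, cpu_cores, memory_gb):
    """Return a list of problems; an empty list means the host matches the table."""
    problems = []
    supported = SUPPORTED_KVM.get(nsx_version, {}).get(hypervisor)
    if supported is None:
        problems.append(f"no table entry for {hypervisor} on NSX-T v{nsx_version}")
    elif os_version not in supported:
        problems.append(f"{hypervisor} {os_version} is not listed for NSX-T v{nsx_version}")
    if cpu_cores < MIN_CPU_CORES:
        problems.append(f"{cpu_cores} CPU cores is below {MIN_CPU_CORES}")
    if memory_gb < MIN_MEMORY_GB:
        problems.append(f"{memory_gb} GB memory is below {MIN_MEMORY_GB} GB")
    return problems


# Ubuntu 16.04.2 LTS is listed for v2.4 but not for v2.5.
print(check_host("2.4", "Ubuntu KVM", "16.04.2 LTS", 4, 16))
print(check_host("2.5", "Ubuntu KVM", "16.04.2 LTS", 4, 16))
```

The release notes remain the source of truth; a lookup like this is only as current as the table it was copied from.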

N-VDS: N-VDS is the NSX-T Virtual Distributed Switch. As NSX-T is not limited to vSphere environments, a new virtual switch had to be developed from scratch to cover all heterogeneous systems. N-VDS delivers network services to the virtual machines running on the hypervisors. On KVM hypervisors, N-VDS is based on Open vSwitch (OVS), which is platform independent.

Bare-metal Servers: Starting with NSX-T v2.3, VMware introduced support for Linux-based workloads, as well as containers, running on bare-metal servers, and this carries over to the current versions. The following operating systems are supported by the NSX-T v2.5 and v2.4 releases:

| Operating System | Version (NSX-T v2.5) | Version (NSX-T v2.4) | CPU Cores | Memory |
|---|---|---|---|---|
| RHEL | 7.5 and 7.4 | 7.5 and 7.4 | 4 | 16 GB |
| SUSE Linux Enterprise Server | n/a | 12 SP3 | 4 | 16 GB |
| Ubuntu | 18.04.2 LTS* | 18.04.2 LTS, 16.04.2 LTS | 4 | 16 GB |
| CentOS | 7.5 and 7.4 | 7.4 | 4 | 16 GB |

\* In NSX-T Data Center v2.5, hosts running Ubuntu 18.04.2 LTS must be upgraded from 16.04. Fresh installs are not supported.

Note: Refer to the release notes of the NSX-T version you are going to deploy to confirm which bare-metal server operating system versions are supported.

EDGE:

NSX Edge nodes are service appliances dedicated to running centralised services, i.e. load balancing, NAT, edge firewall, VPN, DHCP and connectivity to the physical network. They are grouped in one or several clusters, each representing a pool of capacity. An NSX Edge node can be deployed as a VM appliance on ESXi or on a bare-metal server, but it is not supported on KVM-based hypervisors as of the NSX-T v2.5 release.

Note: Edge node deployment on bare-metal servers has limited Intel CPU support and specific NIC requirements. I would therefore recommend checking the VMware HCL for your NSX-T version before deployment.

Public Cloud

NSX-T also extends its scope to public clouds through Public Cloud Gateways (PCG), which are deployed via the Cloud Service Manager (CSM). What it provides: visibility, consistent network and security policy, and end-to-end operational control via a single pane of glass.

Example – If you have NSX-T deployed within your datacenter, you can seamlessly extend its features to the public clouds that you manage. That said, you do not need to have NSX-T deployed and running in your datacenter first; you could start with NSX in the cloud and make the reverse journey to your datacenter.

NSX Cloud Gateways provide a localized NSX control plane in each VPC/VNET and are responsible for pushing policies down to each public cloud instance.

An NSX Agent is pushed to the VMs running in public clouds and is mandatory for NSX-T v2.4. However, NSX-T v2.5 introduces an agentless option for policy enforcement, which translates NSX policies into native, cloud-specific security policies (subject to cloud provider limits on scale, features, etc.).

The NSX-T v2.5 release supports VMware Cloud on AWS, AWS, Azure and IBM Cloud so far; the idea is to add more clouds in the future, in line with the proposition of “NSX Everywhere.”

Containers

Last but not least, NSX-T also provides networking and security for Docker-based containers, which is one of the main use cases for implementing NSX-T over NSX-V.

NSX-T provides a common framework to manage and increase visibility of environments that contain both VMs and containers.

The NSX-T Container Plug-in (NCP), mentioned under the Management plane, provides direct integration with several environments where container-based applications exist. Container orchestrators (sometimes referred to as CaaS), such as Kubernetes (k8s), are ideal candidates for NSX-T integration. Enterprise distributions of k8s, e.g. Red Hat OpenShift and Pivotal Container Service, support integration with NSX-T. Additionally, NSX-T supports integration with PaaS solutions like Pivotal Cloud Foundry.

Compatibility requirements for a Kubernetes environment with NSX-T v2.4.1 and v2.5 are as follows:

| Software Product | Version |
|---|---|
| Hypervisor for Container Host VMs | vSphere (for NSX-T v2.5): v6.5 U2 minimum; vSphere (for NSX-T v2.4.1): v6.5 U2 (minimum ESXi 6.5 P03), v6.7 U1 (ESXi 6.7 EP06), v6.7 U2; RHEL KVM 7.5, 7.6; Ubuntu KVM 16.04, 18.04 |
| Container Host Operating System | RHEL 7.5, 7.6; Ubuntu 16.04, 18.04; CentOS 7.6 |
| Container Orchestrator | Kubernetes 1.13, 1.14 |
| Container Host Open vSwitch | 2.10.2 (packaged with NSX-T Data Center v2.4.1) |

I hope that was informative. Next, let’s talk about the “NSX Agents/services” that work behind the scenes for communication between the NSX-T Manager nodes and transport nodes, in NSX-T Architecture (Revamped): Part 2.