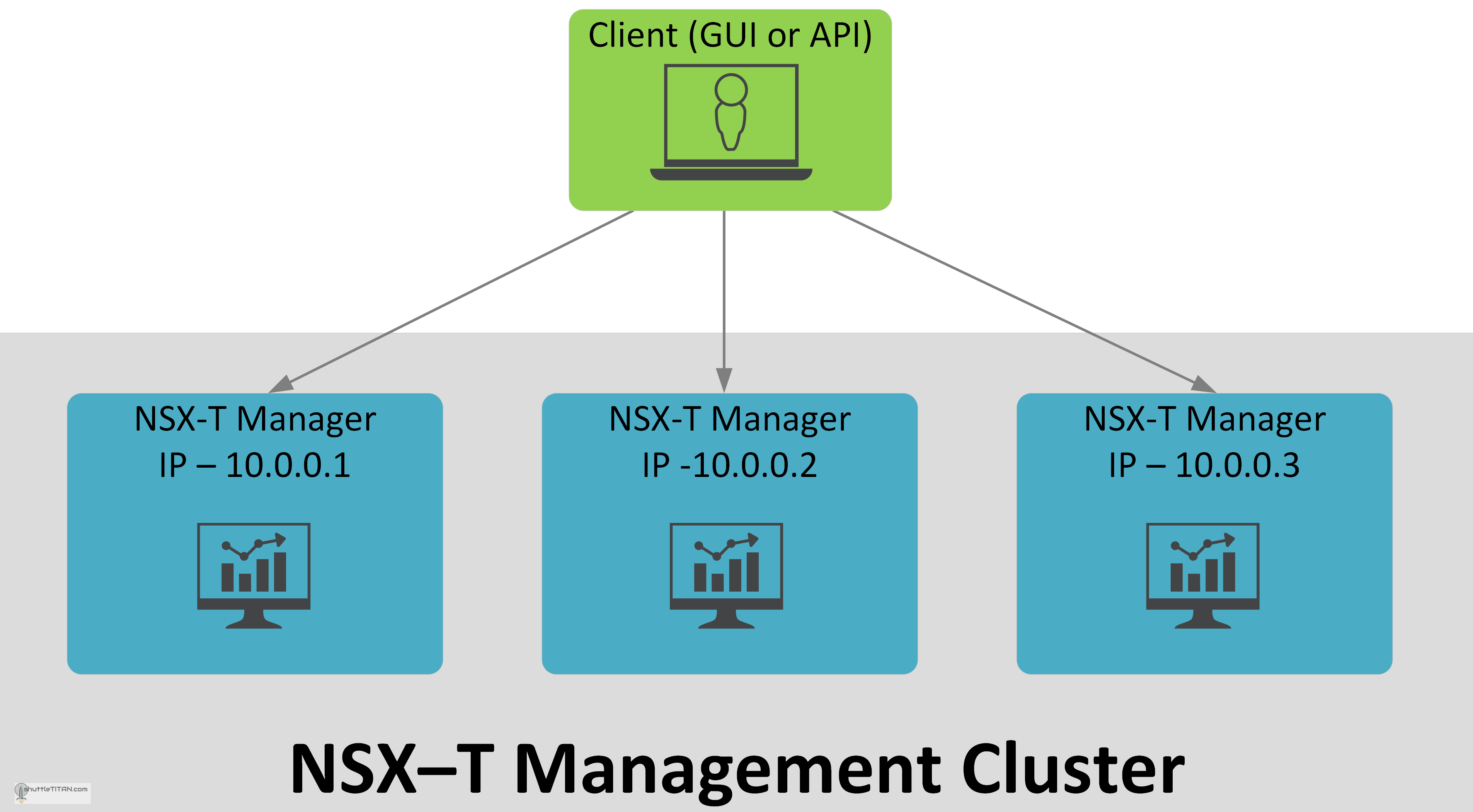

As discussed in one of my previous blogs, NSX-T Architecture (Revamped): Part 1, the release of NSX-T v2.4 brought simplicity, flexibility and scalability to the management plane. If you are not familiar with the NSX-T architecture, I would highly recommend checking out both parts of the architecture blogs, along with NSX-T Management Cluster: Benefits, Roles, CCP Sharding and failure handling.

As with some of my earlier topics, I have split this one into two parts for easier understanding and context:

- NSX-T Management Cluster Deployment: Part 1 (this blog) – shares the general requirements which are common across the deployment options discussed in Part 2.

- NSX-T Management Cluster Deployment: Part 2 – uncovers the deployment options and their relevant use cases.

At first, I planned to write a single blog on the NSX-T Management Cluster deployment options, but quite a few requirements are common to all of them, so I split the topic into two parts. This blog is Part 1 and covers the general requirements of the NSX-T Management Cluster:

- NSX-T Manager deployment is hypervisor agnostic and is supported on vSphere and KVM based hypervisors:

| Hypervisor | Version (for NSX-T v2.5) | Version (for NSX-T v2.4) | CPU Cores | Memory (GB) |
|---|---|---|---|---|
| vSphere | vCenter v6.5 U2d (or later), ESXi v6.5 P03 (or later); vCenter v6.7 U1b (or later), ESXi v6.7 EP06 (or later) | vCenter v6.5 U2d (or later), ESXi v6.5 P03 (or later); vCenter v6.7 U1b (or later), ESXi v6.7 EP06 (or later) | 4 | 16 |
| RHEL KVM | 7.6, 7.5, and 7.4 | 7.6, 7.5, and 7.4 | 4 | 16 |
| CentOS KVM | 7.5, 7.4 | 7.4 | 4 | 16 |
| SUSE Linux Enterprise Server KVM | 12 SP3 | 12 SP3, SP4 | 4 | 16 |
| Ubuntu KVM | 18.04.2 LTS* | 18.04 and 16.04.2 LTS | 4 | 16 |

\* In NSX-T Data Center v2.5, hosts running Ubuntu 18.04.2 LTS must be upgraded from 16.04. Fresh installs are not supported.

- The network latency between NSX Manager nodes in the cluster should not exceed 10 ms.

- The network latency between NSX Manager nodes and Transport Nodes should not exceed 150 ms.

- NSX Manager must have a static IP address and you cannot change the IP address after installation.

- The disk access latency should not exceed 10 ms.

- When installing NSX Manager, specify a hostname that does not contain invalid characters such as an underscore, or special characters such as a dot ("."). If the hostname contains any invalid or special character, it is set to nsx-manager after deployment.

- The NSX Manager VM running on ESXi has VMTools installed. Removing or upgrading VMTools is not recommended.

- Verify that you have the IP address and gateway, DNS server IP addresses, domain search list, and the NTP server IP address for the NSX Manager to use.

- Verify that you have the appropriate privileges to deploy an OVF template.

- The Client Integration Plug-in must be installed.

- NSX Manager VM Resource requirements:

| Appliance Size | Memory (GB) | vCPU | Disk Space | VM Hardware Version | Support |
|---|---|---|---|---|---|
| Extra Small | 8 | 2 | 200 GB | 10 or later | "Cloud Service Manager" role only |
| Small | 16 | 4 | 200 GB | 10 or later | Proof of Concept or Lab |
| Medium | 24 | 6 | 200 GB | 10 or later | Up to 64 Hosts |
| Large | 48 | 12 | 200 GB | 10 or later | Up to 1024 Hosts |
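
To make the sizing table above a little easier to apply, here is a minimal Python sketch that picks the smallest production-capable appliance size for a given number of hosts. The values are copied from the table; the names `ApplianceSize`, `PRODUCTION_SIZES` and `pick_production_size` are my own and purely illustrative, not part of any NSX-T tooling. Extra Small and Small are left out on purpose, since the table lists them for the Cloud Service Manager role and for PoC or lab use only.

```python
from typing import List, NamedTuple


class ApplianceSize(NamedTuple):
    """One row of the NSX Manager sizing table (illustrative structure)."""
    name: str
    memory_gb: int
    vcpus: int
    disk_gb: int
    max_hosts: int


# Values taken from the sizing table; ordered from smallest to largest.
PRODUCTION_SIZES: List[ApplianceSize] = [
    ApplianceSize("Medium", 24, 6, 200, 64),
    ApplianceSize("Large", 48, 12, 200, 1024),
]


def pick_production_size(host_count: int) -> ApplianceSize:
    """Return the smallest listed size whose host limit covers host_count."""
    if host_count < 0:
        raise ValueError("host_count must not be negative")
    for size in PRODUCTION_SIZES:
        if host_count <= size.max_hosts:
            return size
    raise ValueError(
        f"{host_count} hosts exceeds the largest listed size "
        f"({PRODUCTION_SIZES[-1].max_hosts} hosts)"
    )


if __name__ == "__main__":
    size = pick_production_size(200)
    print(f"{size.name}: {size.vcpus} vCPU, {size.memory_gb} GB memory")
```

For example, 200 hosts is above the Medium limit of 64, so the sketch returns Large (12 vCPU, 48 GB memory).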

For further information on maximum configuration details, please see the VMware Configuration Maximums documentation for NSX-T Data Center.
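
The hostname and latency requirements listed earlier are easy to check before you start a deployment. Below is a rough pre-flight sketch in Python, not an official validation tool. The thresholds come from the list above; the function names are hypothetical, and the hostname pattern (letters, digits and hyphens only) is my own assumption, since this post only names the underscore and the dot as characters to avoid. The latency figures are expected to be measured by you beforehand with whatever tool you prefer.

```python
import re
from typing import List

# Thresholds taken from the requirements list in this post (milliseconds).
MAX_MANAGER_TO_MANAGER_MS = 10
MAX_MANAGER_TO_TRANSPORT_MS = 150
MAX_DISK_ACCESS_MS = 10

# Assumption: only letters, digits and hyphens are treated as safe, and the
# name must start and end with a letter or digit. Underscores and dots are
# rejected because the post lists them as invalid/special characters.
_SAFE_HOSTNAME = re.compile(r"[A-Za-z0-9](?:[A-Za-z0-9-]*[A-Za-z0-9])?")


def hostname_is_safe(hostname: str) -> bool:
    """Return True if the hostname avoids the characters the post warns about."""
    return bool(_SAFE_HOSTNAME.fullmatch(hostname))


def check_latencies(manager_ms: float, transport_ms: float,
                    disk_ms: float) -> List[str]:
    """Compare measured latencies with the limits; return a list of problems."""
    problems = []
    if manager_ms > MAX_MANAGER_TO_MANAGER_MS:
        problems.append(
            f"manager-to-manager latency {manager_ms} ms exceeds "
            f"{MAX_MANAGER_TO_MANAGER_MS} ms")
    if transport_ms > MAX_MANAGER_TO_TRANSPORT_MS:
        problems.append(
            f"manager-to-transport-node latency {transport_ms} ms exceeds "
            f"{MAX_MANAGER_TO_TRANSPORT_MS} ms")
    if disk_ms > MAX_DISK_ACCESS_MS:
        problems.append(
            f"disk access latency {disk_ms} ms exceeds {MAX_DISK_ACCESS_MS} ms")
    return problems


if __name__ == "__main__":
    for name in ("nsx-mgr-01", "nsx_mgr_01", "nsx.mgr.01"):
        print(name, "->", "ok" if hostname_is_safe(name) else "would be reset")
    for issue in check_latencies(manager_ms=12, transport_ms=90, disk_ms=4):
        print("WARNING:", issue)
```

An empty list from `check_latencies` means all three measurements are within the limits quoted above.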

General Best Practices:

- Deploy the NSX-T Manager VMs on shared storage.

- Configure DRS anti-affinity rules to keep the NSX-T Manager VMs on separate hosts.

- When deploying NSX-T service VMs as a high-availability pair, enable DRS to ensure that vMotion will function properly.

This completes Part 1, i.e. the general requirements of the NSX-T Management Cluster deployment. Let's discuss the deployment options and their relevant use cases in Part 2.