As the title suggests, “NSX-T Management Cluster Deployment” is another topic split into two parts for easier understanding and context:

- NSX-T Management Cluster Deployment: Part 1 – discusses the general requirements common to all the deployment options covered in Part 2.

- NSX-T Management Cluster Deployment: Part 2 (this blog) – covers the deployment options and their use cases.

I would highly encourage you to read and understand Part 1 first, as it covers the requirements common to all three deployment options discussed in this blog (Part 2); missing any one of them can cause unpredictable results or issues.

For production environments, VMware supports only a three-node NSX-T Management Cluster. A single NSX-T Manager can also be deployed for proofs of concept and labs, but that is not discussed in this blog. The three deployment options for the NSX-T Management Cluster, introduced with NSX-T v2.4, are as follows:

- NSX-T Management Cluster – Default Mode.

- NSX-T Management Cluster with Internal Virtual IP.

- NSX-T Management Cluster with External Load Balancer Virtual IP.

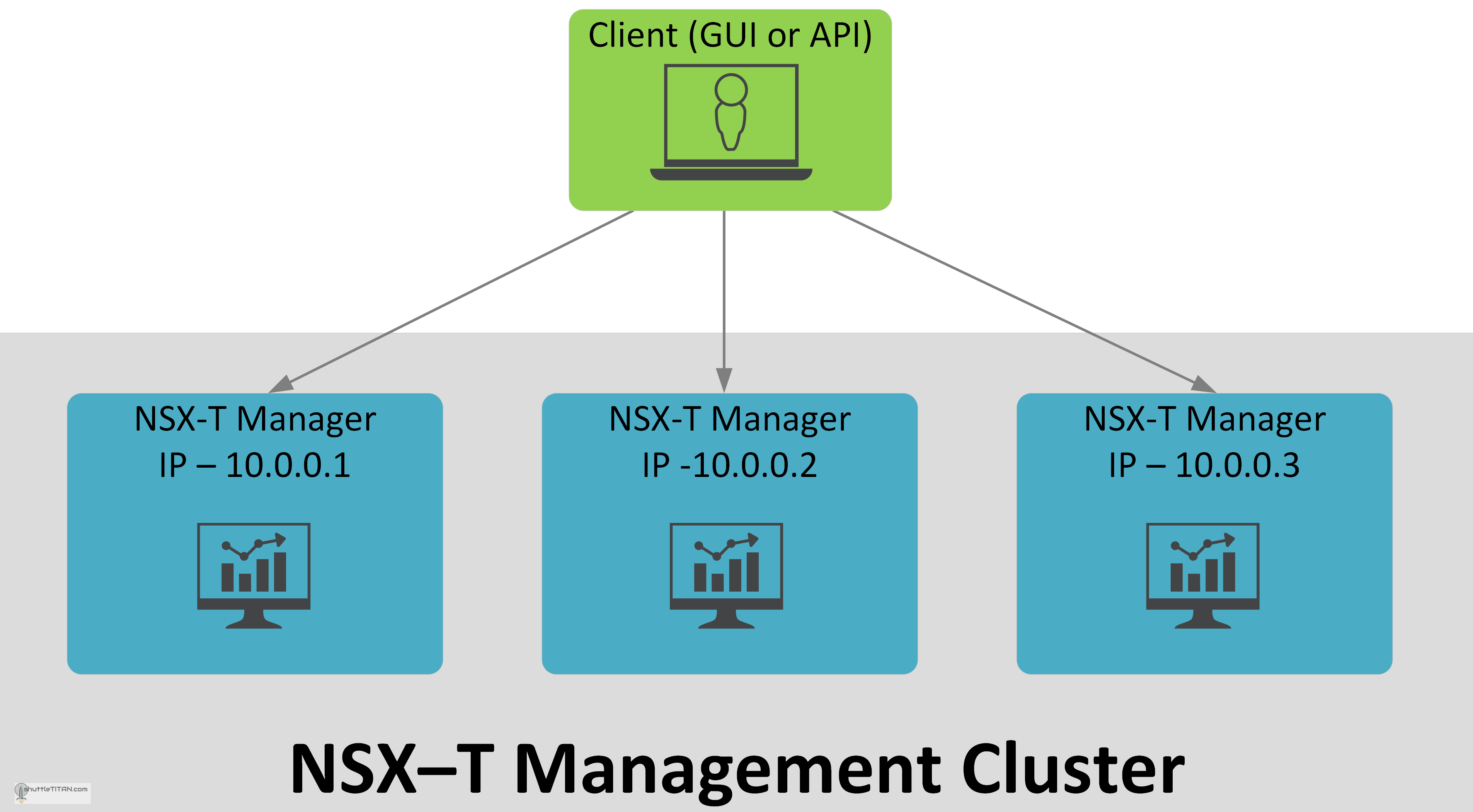

Option 1: NSX-T Management Cluster – Default Mode

The image above illustrates the NSX-T Management Cluster in “default mode”, which is its state after the three nodes are deployed or upgraded from NSX-T v2.3. The key points are:

- No Layer 2 adjacency requirements.

- All three NSX-T Managers can individually be used for GUI and API access.

- If one NSX-T Manager fails, connections to it are dropped, but users can switch to one of the remaining NSX-T Managers for GUI and API access.

- At least two NSX-T Managers must be available for the cluster to function.
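To make the default-mode behaviour concrete, here is a minimal Python sketch of what a client script might do: try each manager's own IP in turn, and treat the cluster as functional only while at least two of the three nodes are up. The node IPs and the `is_up` callback are hypothetical placeholders; a real script would probe each manager over HTTPS instead.

```python
from typing import Callable, Optional, Sequence

# Hypothetical node IPs for a three-node cluster; replace with your own.
MANAGERS = ["10.0.10.11", "10.0.10.12", "10.0.10.13"]


def has_quorum(up_count: int, cluster_size: int = 3) -> bool:
    """True while a majority of nodes is up (2 of 3 for a three-node cluster)."""
    return up_count > cluster_size // 2


def pick_manager(nodes: Sequence[str],
                 is_up: Callable[[str], bool]) -> Optional[str]:
    """Return the first manager that is up, or None if quorum is lost.

    Default mode has no shared address, so the client itself falls back
    to another node's own IP when the manager it was using fails.
    """
    up = [n for n in nodes if is_up(n)]
    if not has_quorum(len(up), len(nodes)):
        return None
    return up[0]


if __name__ == "__main__":
    failed = {"10.0.10.11"}  # simulate a failure of the first manager
    print(pick_manager(MANAGERS, lambda ip: ip not in failed))  # 10.0.10.12
```

The majority rule is why a three-node cluster tolerates exactly one node failure: with two nodes down, no single survivor can form a majority.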

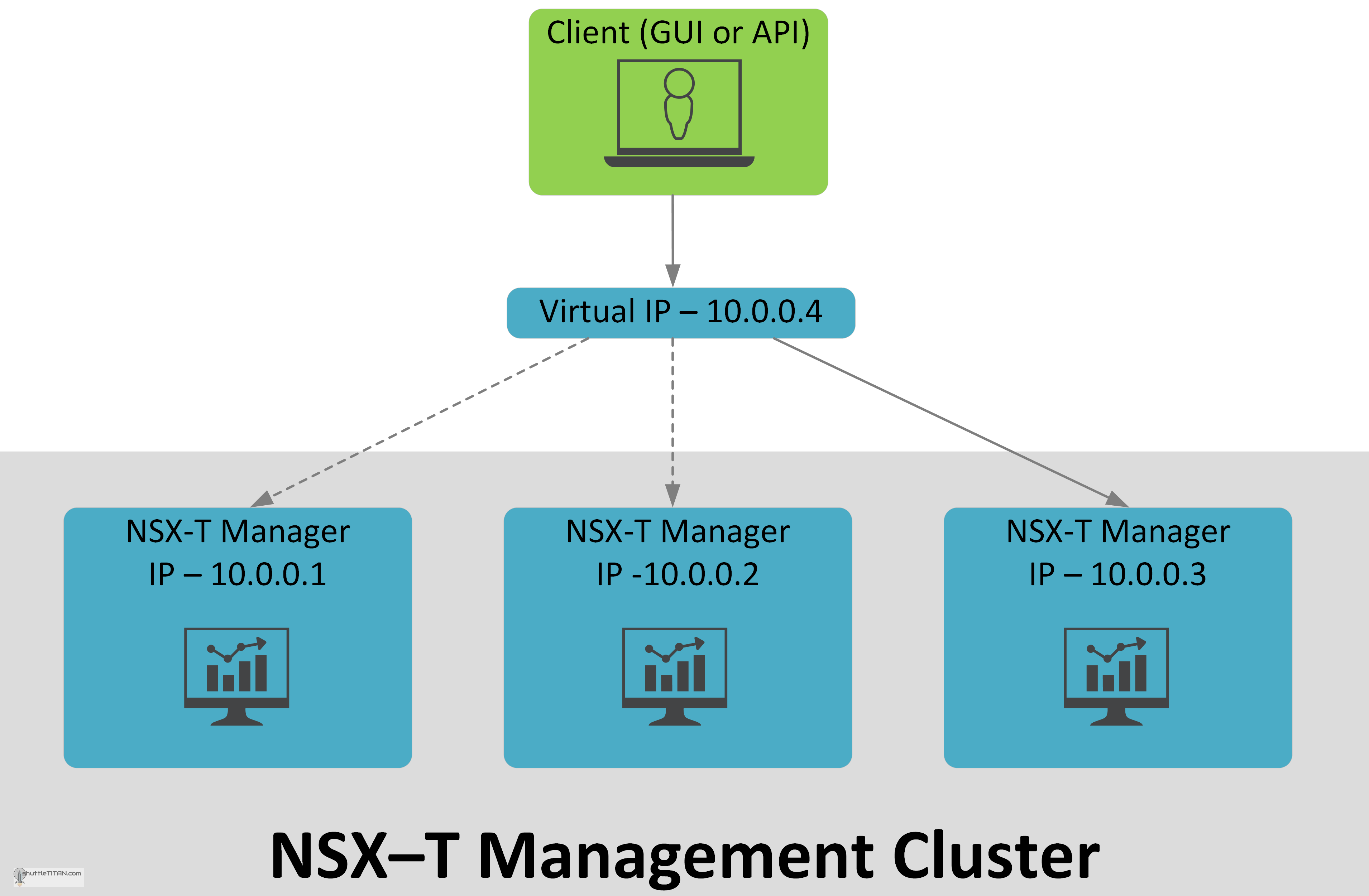

Option 2: NSX-T Management Cluster with Virtual IP

This option uses the cluster Virtual IP feature built into NSX-T Manager. The key points are:

- All NSX-T Manager nodes must be in the same subnet.

- One NSX-T Manager node is elected as the leader, and the cluster Virtual IP is attached to it.

- All three NSX-T Managers can still be individually used for GUI and API access.

- If the leader node fails, NSX-T elects a new leader, which sends a Gratuitous Address Resolution Protocol (GARP) request to take ownership of the Virtual IP. In this scenario, all live connections to the cluster Virtual IP are dropped, and new connections must be established through the new leader node for GUI and API access.

- At least two NSX-T Managers must be available for the cluster to function.
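Because the Virtual IP moves between nodes via GARP, the manager nodes and the Virtual IP itself need to share one subnet. A quick pre-deployment check can be sketched with Python's standard `ipaddress` module; the addresses and the /24 prefix below are hypothetical examples.

```python
import ipaddress
from typing import Sequence


def vip_placement_ok(vip: str, node_ips: Sequence[str], prefix_len: int) -> bool:
    """Return True if every manager node IP is in the VIP's subnet.

    The subnet is derived from the VIP and the given prefix length.
    """
    subnet = ipaddress.ip_network(f"{vip}/{prefix_len}", strict=False)
    return all(ipaddress.ip_address(ip) in subnet for ip in node_ips)


if __name__ == "__main__":
    nodes = ["10.0.10.11", "10.0.10.12", "10.0.10.13"]
    print(vip_placement_ok("10.0.10.10", nodes, 24))                        # True
    print(vip_placement_ok("10.0.10.10", ["10.0.10.11", "10.0.20.12"], 24))  # False
```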

Benefits over default mode:

- Allows users to access NSX-T Manager using a single IP.

- Changes to node IPs do not affect accessibility via the GUI or API.

The cluster Virtual IP:

- Is used only for northbound (GUI and API) access; all southbound communication uses each NSX-T Manager node's own IP.

- Does not perform load balancing; it only forwards requests to the leader node.

- Does not detect the failure of every individual service.

- Does not fail over existing sessions; clients must re-authenticate and establish a new connection.
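The following toy model (not NSX-T code) illustrates the Virtual IP behaviour described above: requests sent to the VIP always land on the current leader, and when the leader fails a new node takes over the VIP, but existing sessions are not carried across, so the client must log in again. The class and method names are hypothetical, and the election rule (lowest surviving node name wins) is an arbitrary stand-in for NSX-T's internal leader election.

```python
from typing import Dict, List, Optional, Set


class VipCluster:
    """Toy model of a cluster VIP owned by a single leader node (not NSX-T code)."""

    def __init__(self, nodes: List[str]) -> None:
        self.alive: Dict[str, bool] = {n: True for n in nodes}
        # Hypothetical election rule: lowest node name wins.
        self.leader: Optional[str] = min(nodes)
        # Sessions live on the node that authenticated them; none are replicated.
        self.sessions: Dict[str, Set[str]] = {n: set() for n in nodes}

    def login(self, user: str) -> str:
        """Authenticate through the VIP, i.e. on whichever node is leader."""
        if self.leader is None:
            raise RuntimeError("cluster has lost quorum; VIP unavailable")
        self.sessions[self.leader].add(user)
        return self.leader

    def request(self, user: str) -> bool:
        """Forward a request to the leader only (no load balancing)."""
        return self.leader is not None and user in self.sessions[self.leader]

    def fail(self, node: str) -> None:
        """Fail a node and, if needed, move the VIP to a new leader."""
        self.alive[node] = False
        self.sessions[node].clear()  # sessions on the failed node are lost
        survivors = sorted(n for n, up in self.alive.items() if up)
        if len(survivors) < 2:
            self.leader = None  # fewer than two managers: no quorum
        elif self.leader not in survivors:
            self.leader = survivors[0]  # stands in for the GARP takeover


if __name__ == "__main__":
    c = VipCluster(["nsx-a", "nsx-b", "nsx-c"])
    c.login("admin")            # session created on leader nsx-a
    c.fail("nsx-a")             # nsx-b takes over the VIP
    print(c.leader)             # nsx-b
    print(c.request("admin"))   # False: existing session was not failed over
    c.login("admin")            # re-authenticate via the VIP, now on nsx-b
    print(c.request("admin"))   # True
```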

Use Cases:

- Two Independent Sites (each site with its own vCenter Server): All NSX-T Manager nodes run on the primary site; NSX-T Manager is recovered manually from backups on the secondary site.

- Dual Site, Single Stretched Metro Cluster (one vCenter Server for both sites): All NSX-T Managers run on the primary site, and recovery of the NSX-T Managers is automatic, relying on vSphere HA. Supported from NSX-T v2.5 onwards (the NSX-T management VLAN must be stretched and the T0 must be in Active/Standby configuration).

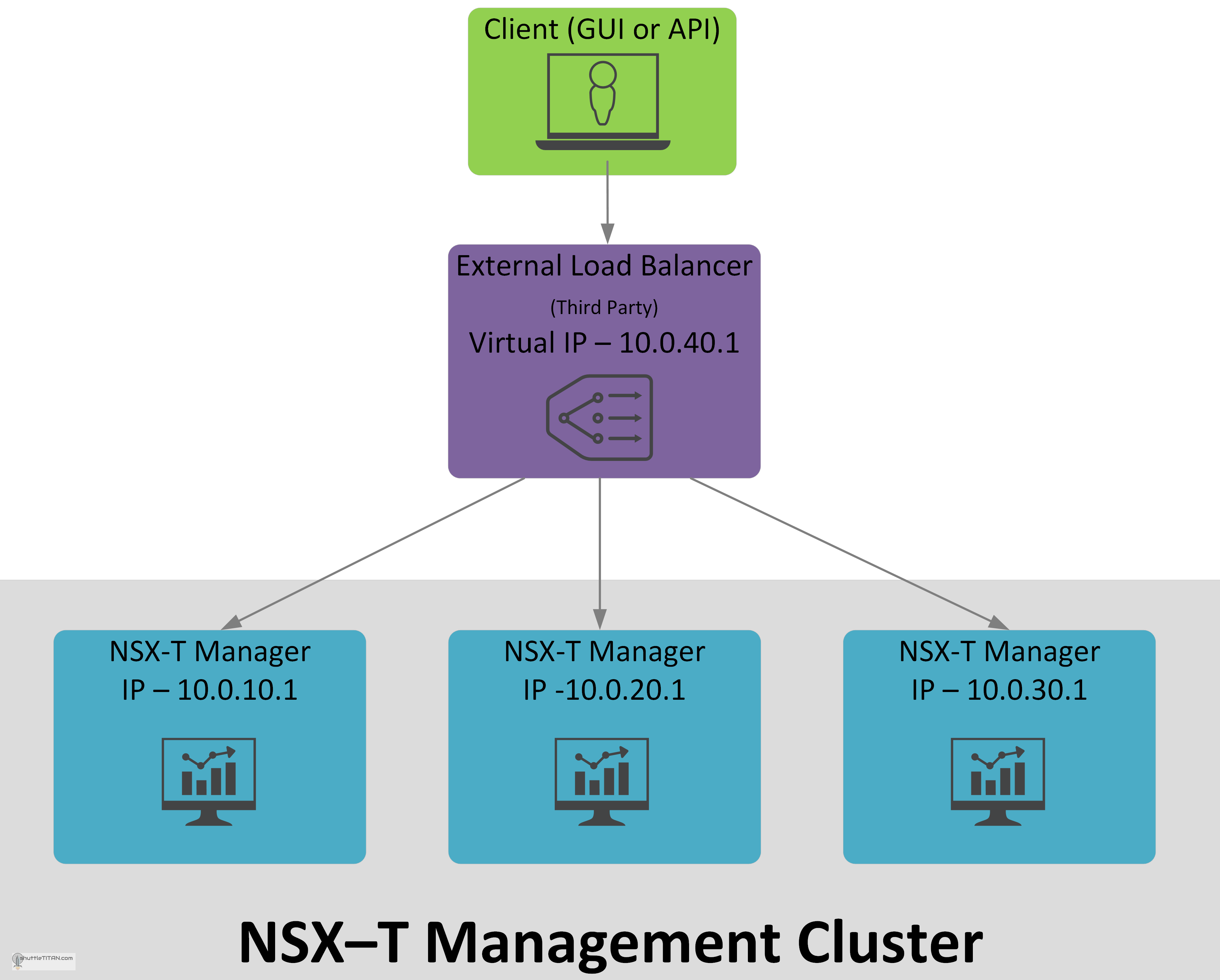

Option 3: NSX-T Management Cluster with External Load Balancer

This option uses an external load balancer that forwards HTTPS requests to the NSX-T Manager nodes. The key points are:

- All NSX-T Manager nodes can be on different subnets.

- The external load balancer's Virtual IP can distribute requests across all three NSX-T Manager nodes.

- All three NSX-T Managers can still be individually used for GUI and API access.

- At least two NSX-T Managers must be available for the cluster to function.

Benefits over Option 2 (NSX-T Management Cluster with Virtual IP):

- All NSX-T Managers actively serve requests.

- Load is distributed according to the load balancing algorithm configured.

- No requirement for the nodes to be in the same subnet.
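How the load is actually spread depends on the external load balancer and the algorithm you configure on it. As an illustration only, here is round-robin, one common algorithm, selecting among whichever manager nodes currently pass their health check. The class name and IPs are hypothetical, and health state is set by hand rather than probed; the nodes deliberately sit in different subnets, which Option 3 permits.

```python
from itertools import cycle
from typing import Dict, Iterator, List


class RoundRobinPool:
    """Minimal round-robin selector over healthy pool members (illustrative only)."""

    def __init__(self, members: List[str]) -> None:
        self.members = members
        self.healthy: Dict[str, bool] = {m: True for m in members}
        self._ring: Iterator[str] = cycle(members)

    def set_health(self, member: str, up: bool) -> None:
        """Record the result of a (simulated) health check."""
        self.healthy[member] = up

    def next_member(self) -> str:
        """Return the next healthy member, skipping failed ones."""
        for _ in range(len(self.members)):
            candidate = next(self._ring)
            if self.healthy[candidate]:
                return candidate
        raise RuntimeError("no healthy pool members")


if __name__ == "__main__":
    # Hypothetical manager IPs, each in a different subnet.
    pool = RoundRobinPool(["10.0.10.11", "10.0.20.12", "10.0.30.13"])
    print([pool.next_member() for _ in range(3)])
    # ['10.0.10.11', '10.0.20.12', '10.0.30.13']
    pool.set_health("10.0.20.12", False)  # simulate a failed health check
    print([pool.next_member() for _ in range(3)])
    # ['10.0.10.11', '10.0.30.13', '10.0.10.11']
```

Unlike the cluster Virtual IP, every healthy node receives traffic here, which is the "all NSX-T Managers are active" benefit listed above.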

Use Cases:

- Two Independent Sites (each site with its own vCenter Server): All NSX-T Managers run on the primary site; NSX-T Manager is recovered manually from backups on the secondary site.

- Dual Site, Single Stretched Metro Cluster (one vCenter Server for both sites): All NSX-T Managers run on the primary site, and recovery of the NSX-T Managers is automatic, relying on vSphere HA. Supported from NSX-T v2.5 onwards (the NSX-T management VLAN must be stretched and the T0 must be in Active/Standby configuration).

- Three Independent Sites (each site with its own vCenter Server): One NSX-T Manager node is placed in each site.

- NSX-T Manager nodes placed in different racks, requiring IPs from different subnets.
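The site-based use cases differ mainly in what a whole-site failure does to the two-of-three majority. A small sketch, using hypothetical node and site names, checks whether losing any single site still leaves at least two managers running. With all three nodes on the primary site it does not, which is why the two-site cases above rely on backups or vSphere HA for recovery; with one node per site it does. The check looks only at static placement and ignores any restarts by vSphere HA.

```python
from collections import Counter
from typing import Dict


def survives_any_site_loss(placement: Dict[str, str], quorum: int = 2) -> bool:
    """Return True if losing any single site leaves at least `quorum` managers.

    `placement` maps a (hypothetical) manager name to a (hypothetical) site name.
    """
    per_site = Counter(placement.values())  # number of managers per site
    total = len(placement)
    return all(total - count >= quorum for count in per_site.values())


if __name__ == "__main__":
    all_on_primary = {"nsx-a": "site-1", "nsx-b": "site-1", "nsx-c": "site-1"}
    one_per_site = {"nsx-a": "site-1", "nsx-b": "site-2", "nsx-c": "site-3"}
    print(survives_any_site_loss(all_on_primary))  # False
    print(survives_any_site_loss(one_per_site))    # True
```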

I hope this was informative. Next, I will be writing a step-by-step NSX-T deployment guide 😊
