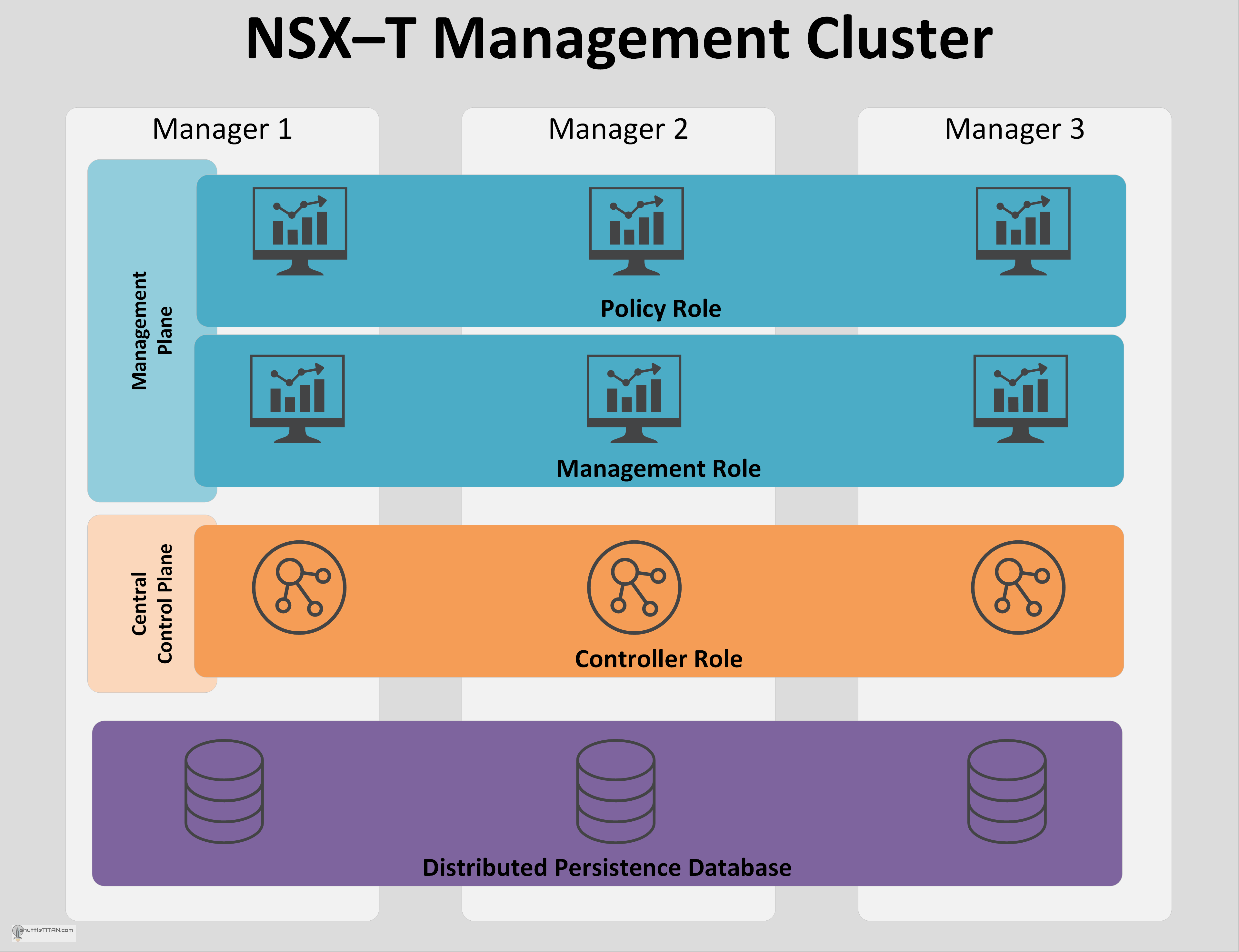

In versions prior to NSX-T v2.4, four appliances were deployed based on roles, i.e. one management appliance and three controller appliances, all of which had to be managed for NSX. From v2.4 onward, the NSX Manager, NSX Policy and NSX Controller roles are consolidated into a single unified appliance, which can be scaled to a three-node cluster for redundancy and high availability. The desired state is replicated in the distributed persistent database, so configuration and operations can be performed on any appliance.

Benefits of having a unified appliance cluster:

- Less management overhead with fewer appliances.

- Potential reduction in the compute resources required.

- Simplified installation and upgrades.

- High availability and scalability of all services, including UI and API consumption.

The NSX Manager node performs several functions. Besides the distributed persistent database, it essentially hosts three roles:

Management Plane

Policy Role:

Provides network and security configuration across the environment and is accessed through the new “Simplified UI” to define the desired state of the system.

The NSX Manager role is responsible for:

- Receiving and validating the configuration from the NSX Policy role.

- Storing the configuration in the distributed persistent database (CorfuDB).

- Publishing the configuration to the Central Control Plane.

- Installing, managing and monitoring the data plane components.
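The first three responsibilities above form a simple pipeline: validate, persist, publish. The following is a toy sketch of that flow only, not NSX-T code. The class `ManagerRole`, its fields, and the `id`/`type` keys are hypothetical names chosen for illustration, and plain Python containers stand in for CorfuDB and for the channel to the Central Control Plane.

```python
class ManagerRole:
    """Toy model of the NSX Manager role (all names here are hypothetical)."""

    REQUIRED_KEYS = {"id", "type"}  # assumed minimal shape of a configuration item

    def __init__(self):
        self.store = {}      # stand-in for the distributed persistent database
        self.published = []  # stand-in for what is sent to the Central Control Plane

    def apply(self, config: dict) -> None:
        # 1. Receive and validate the configuration coming from the Policy role.
        missing = self.REQUIRED_KEYS - config.keys()
        if missing:
            raise ValueError(f"invalid configuration, missing: {sorted(missing)}")
        # 2. Store the validated configuration.
        self.store[config["id"]] = config
        # 3. Publish it to the control plane only after it has been stored.
        self.published.append(config)


manager = ManagerRole()
manager.apply({"id": "segment-web", "type": "segment"})
print(manager.store)
```

The ordering is the point of the sketch: a configuration that fails validation is neither stored nor published.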

Central Control Plane

The CCP (Central Control Plane) maintains the realized state of the system and is responsible for:

- Providing control plane functions, i.e. logical switching, routing and distributed firewall.

- Computing all ephemeral runtime state based on the configuration received from the management plane.

- Pushing stateless configuration to the Local Control Plane (LCP) daemons running on the transport nodes, which in turn propagate it down to the forwarding engines.

For more information on NSX agent/service communication, please refer to my other blog post, NSX-T Architecture (Revamped): Part 2.

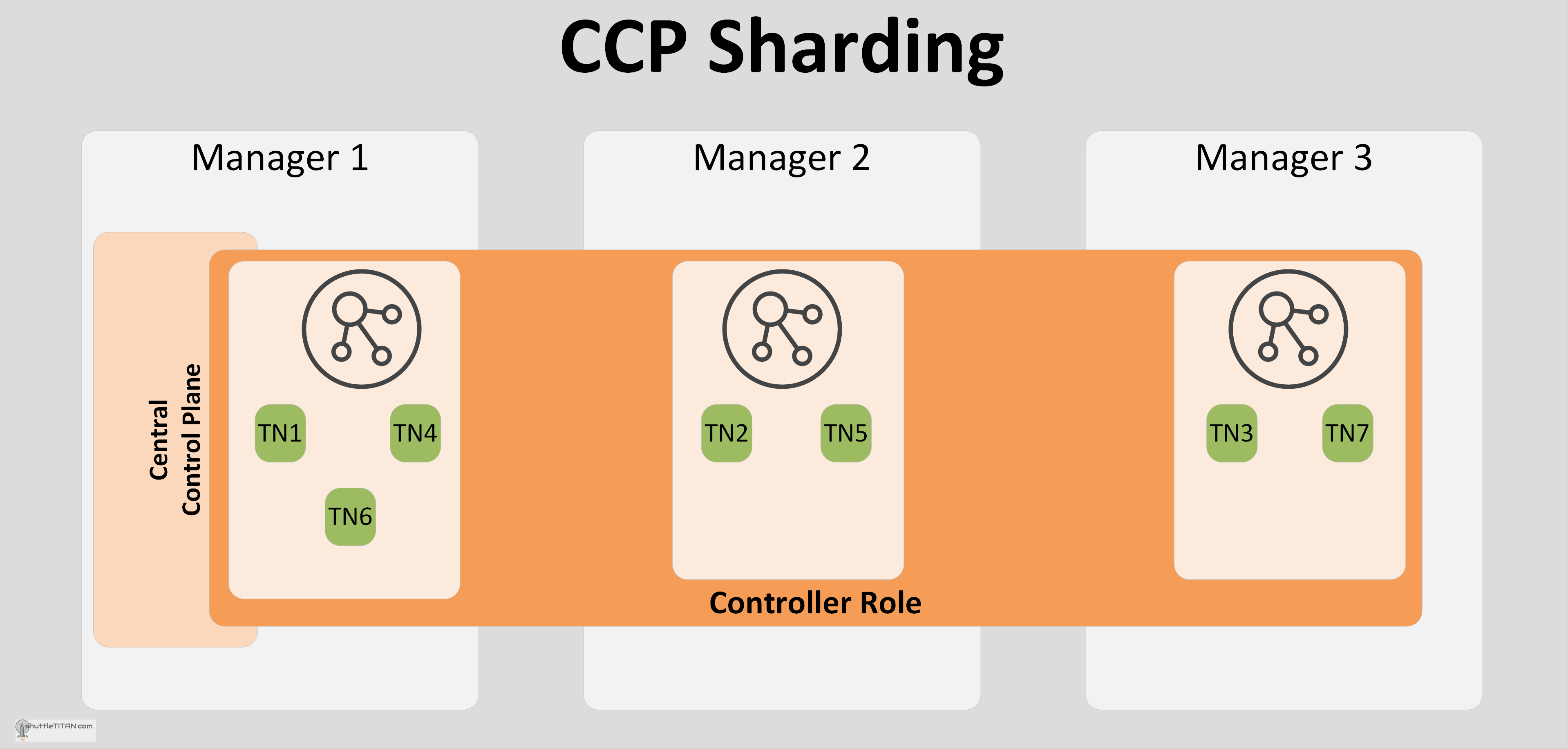

Central Control Plane (CCP) Sharding

The CCP uses a sharding mechanism to distribute the load between the controller nodes, i.e. each transport node is assigned to a single controller node for L2, L3 and distributed firewall configuration. Every controller node still receives configuration updates from the management and data planes, but it maintains only the information applicable to the transport nodes assigned to it, as illustrated in the image below:

Note: “TN1, TN2, TN3, etc.” in the image above are the transport nodes.

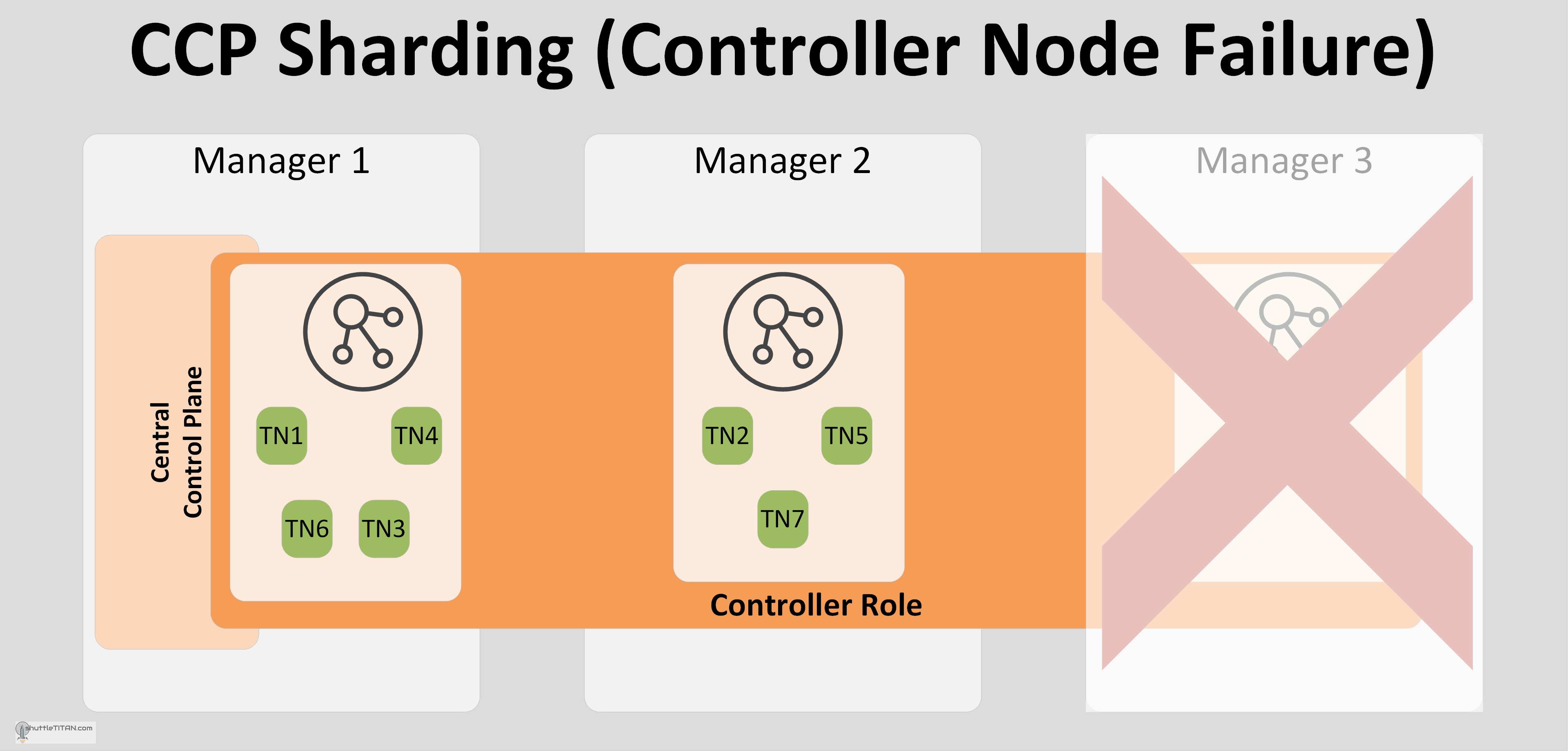

The transport node to controller node mapping is done via an internal hashing algorithm. When a controller node fails, the sharding table is recalculated and the load is redistributed between the remaining controllers. The data plane traffic continues to operate without disruption, as illustrated in the image below:

Transport nodes TN3 and TN7 are now assigned to the controller plane on Manager 1 and Manager 2 respectively.
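To make the sharding behaviour more concrete, here is a minimal sketch. The actual NSX-T hashing algorithm is internal and not documented here, so this example swaps in rendezvous (highest-random-weight) hashing purely as an assumption. It was chosen because it shows the same effect: when a controller fails, only the transport nodes it owned are reassigned. The function names and the update format are hypothetical.

```python
import hashlib


def _score(transport_node: str, controller: str) -> int:
    # Deterministic weight for a (controller, transport node) pair.
    digest = hashlib.sha256(f"{controller}|{transport_node}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def build_sharding_table(transport_nodes, controllers):
    """Assign each transport node to exactly one controller (assumed scheme)."""
    if not controllers:
        raise ValueError("at least one controller node is required")
    return {
        tn: max(controllers, key=lambda c: _score(tn, c))
        for tn in transport_nodes
    }


def applicable_updates(controller, updates, table):
    """Every controller sees every update but keeps only those for its own nodes."""
    return [u for u in updates if table[u["transport_node"]] == controller]


transport_nodes = [f"TN{i}" for i in range(1, 10)]
controllers = ["Manager 1", "Manager 2", "Manager 3"]

before = build_sharding_table(transport_nodes, controllers)

# Simulate the failure of one controller and recalculate the table.
survivors = [c for c in controllers if c != "Manager 3"]
after = build_sharding_table(transport_nodes, survivors)

moved = [tn for tn in transport_nodes if before[tn] != after[tn]]
print("Reassigned after failure:", moved)
```

In this sketch, the transport nodes that were not owned by the failed controller keep their original assignment, so the recalculation only touches the affected shard.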

I hope this was informative. Let's discuss the general requirements of the NSX-T Management Cluster in NSX-T Management Cluster Deployment: Part 1.
