** [NOTE]: This blog post applies only to NSX-T v2.3 and earlier.

For NSX-T v2.4 and later, please see NSX-T Architecture (Revamped): Part 2, which covers the new architecture introduced by VMware. **

This blog post is Part 2, a continuation of NSX-T Architecture: Part 1.

In NSX-T Architecture: Part 1, we discussed the NSX-T architecture and how it extends the scope from on-premises to public clouds (and vice versa) and from VMs to containers.

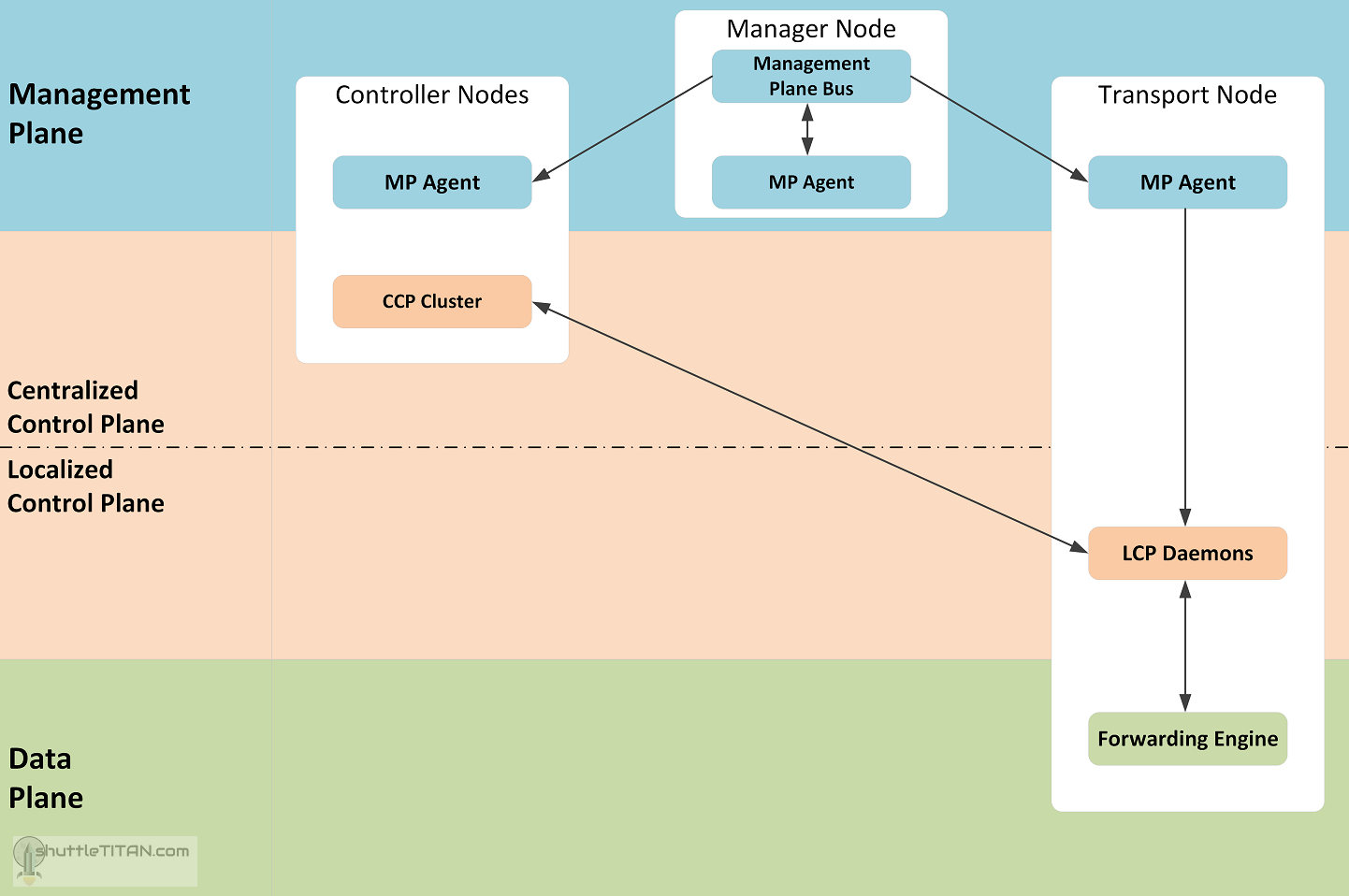

Let’s now talk about the NSX agent communications that take place behind the scenes in the NSX-T architecture. Several NSX modules work together to form a robust and reliable system. The following diagram will help visualise the key ones:

Management Plane Agent Communication

As discussed in the previous post, the management plane provides the entry point to NSX-T Data Center for operational tasks such as configuring and monitoring all management, control, and data plane components. It is responsible for querying recent status and statistics from the control plane, and sometimes directly from the data plane.

The Management Plane Agent (MPA) runs on the controller nodes and Transport Nodes, and is accessible both locally and remotely. On a Transport Node, the MPA may perform the following tasks as requested by the NSX Manager via the Management Plane Bus:

- Configuration persistence (desired logical state)

- Input validation

- User management – role assignments

- Policy management

- Background task tracking

A Transport Node is a system capable of running an overlay; it can be a Host or an Edge Node.

Control Plane Agent Communication

The control plane provides control functions for logical switching, routing, and so on. It is further divided into two parts: the Central Control Plane (CCP) and the Local Control Plane (LCP).

The CCP runs on the controller cluster nodes and is logically separated from all data plane traffic, so a failure in the control plane does not affect the data plane. It is responsible for providing configuration to the other controller cluster nodes and to the LCP.

The LCP is a daemon that runs on the Transport Node. It monitors local link status, computes most of the ephemeral runtime state from the updates it receives from the CCP and the data plane, and pushes stateless configuration to the forwarding engines.
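To make the CCP-to-LCP relationship more concrete, here is a minimal toy model in Python. It is only a sketch of the idea described above, not NSX-T code: the class names `CentralControlPlane` and `LocalControlPlane`, their methods, and the MAC and TEP values are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LocalControlPlane:
    """Hypothetical LCP on one transport node (illustrative only)."""
    node_name: str
    local_tep: str
    link_up: bool = True
    # Configuration received from the CCP: MAC -> tunnel endpoint (TEP) IP.
    mac_to_tep: dict = field(default_factory=dict)
    # Stateless table that would be handed to the forwarding engine.
    forwarding_table: dict = field(default_factory=dict)

    def on_ccp_update(self, mac_to_tep):
        """Accept a fresh snapshot from the CCP and recompute runtime state."""
        self.mac_to_tep = dict(mac_to_tep)
        self._recompute()

    def on_link_status(self, up):
        """React to a local link change reported by the data plane."""
        self.link_up = up
        self._recompute()

    def _recompute(self):
        # Ephemeral state: derived entirely from CCP input and link status.
        if not self.link_up:
            self.forwarding_table = {}
            return
        self.forwarding_table = {
            mac: ("local" if tep == self.local_tep else f"tunnel:{tep}")
            for mac, tep in self.mac_to_tep.items()
        }


@dataclass
class CentralControlPlane:
    """Hypothetical CCP that distributes mappings to every registered LCP."""
    lcps: list = field(default_factory=list)
    mac_to_tep: dict = field(default_factory=dict)

    def register(self, lcp):
        self.lcps.append(lcp)
        lcp.on_ccp_update(self.mac_to_tep)

    def learn(self, mac, tep):
        self.mac_to_tep[mac] = tep
        for lcp in self.lcps:
            lcp.on_ccp_update(self.mac_to_tep)


ccp = CentralControlPlane()
host_a = LocalControlPlane("host-a", "10.0.0.1")
host_b = LocalControlPlane("host-b", "10.0.0.2")
ccp.register(host_a)
ccp.register(host_b)
ccp.learn("aa:aa", "10.0.0.1")
ccp.learn("bb:bb", "10.0.0.2")
print(host_a.forwarding_table)
# {'aa:aa': 'local', 'bb:bb': 'tunnel:10.0.0.2'}
```

Note that the forwarding table is always rebuilt from scratch, which mirrors the point that the LCP's runtime state is ephemeral and can be recomputed from what the CCP and the data plane report.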

Data Plane Agent Communication

The data plane has a forwarding engine that performs stateless packet forwarding based on the tables and rules pushed by the control plane, and handles failover between multiple links and tunnels. It also maintains some state for features such as TCP termination. However, unlike the state managed by the control plane (for example, MAC/IP-to-tunnel mappings), which dictates how to forward packets, the state held by the data plane is limited to how to manipulate the payload.
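As a rough illustration of stateless forwarding with failover, the sketch below picks an egress tunnel for a frame using only a table pushed from the control plane and the current tunnel liveness. The function name `forward`, the table layout, and the tunnel names are assumptions made for this example; what a real forwarding engine does with an unknown destination is not modelled here.

```python
def forward(dst_mac, table, tunnel_up):
    """Choose an egress tunnel for one frame.

    table:     MAC -> ordered list of candidate tunnels (primary first),
               as pushed by the control plane.
    tunnel_up: tunnel name -> liveness, as tracked by the data plane.
    Returns the first live tunnel, or None if there is no usable entry.
    """
    for tunnel in table.get(dst_mac, []):
        if tunnel_up.get(tunnel, False):
            return tunnel
    return None


# Hypothetical table and tunnel status for illustration.
table = {"bb:bb": ["tunnel-1", "tunnel-2"]}
status = {"tunnel-1": True, "tunnel-2": True}

print(forward("bb:bb", table, status))  # tunnel-1
status["tunnel-1"] = False              # primary tunnel goes down
print(forward("bb:bb", table, status))  # tunnel-2
```

The function keeps no per-flow memory between calls: each decision depends only on the pushed table and the link status, which is the sense in which the forwarding is stateless.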

I hope this blog post was informative. For step-by-step installation instructions, click NSX-T Installation.